Raspberry Pi Smart Home Manager

ECE 5725 Fall 2023

Zidong Huang (zh445), Yuqiang Ge (yg585)

Demonstration Video

Introduction

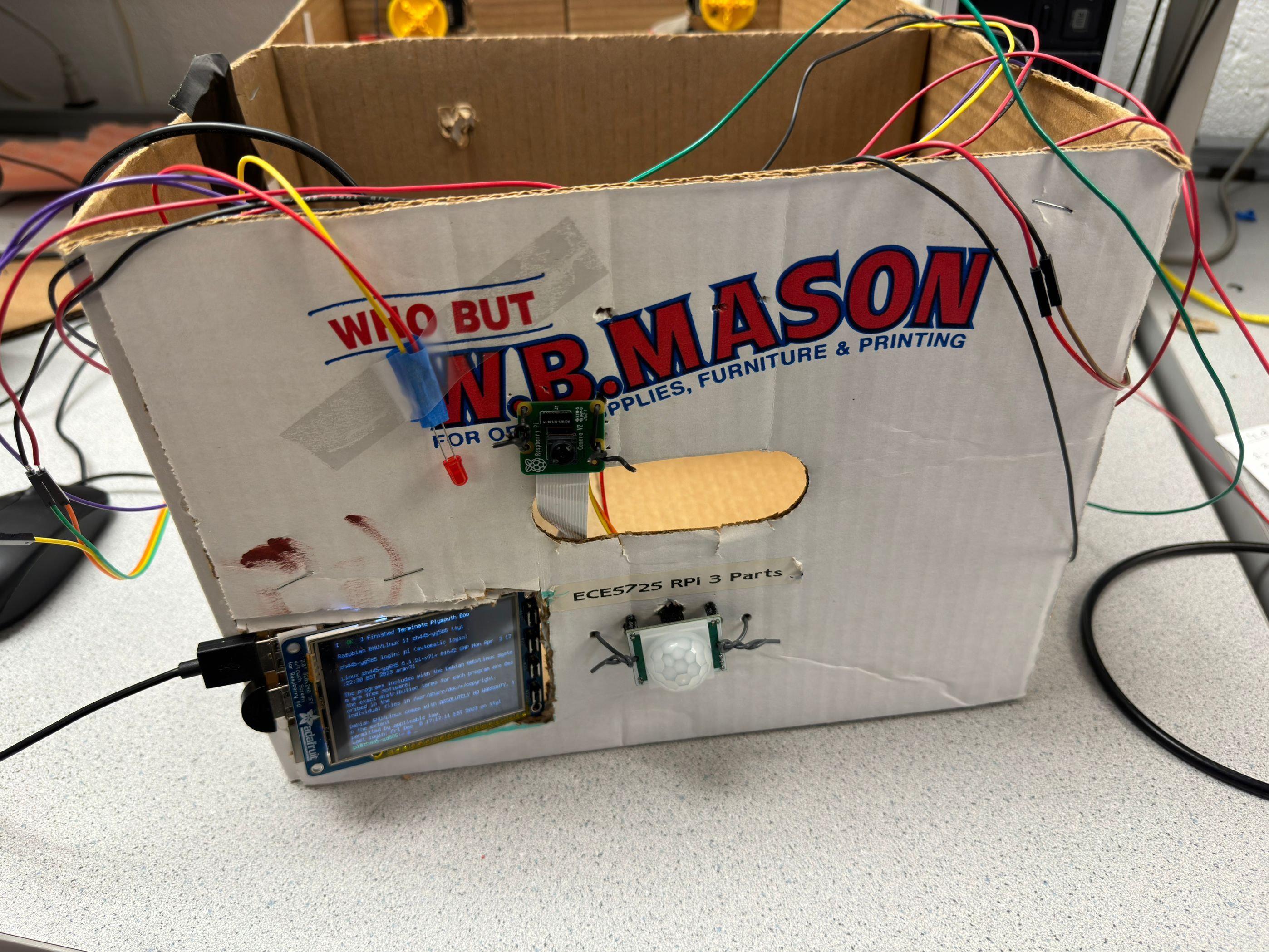

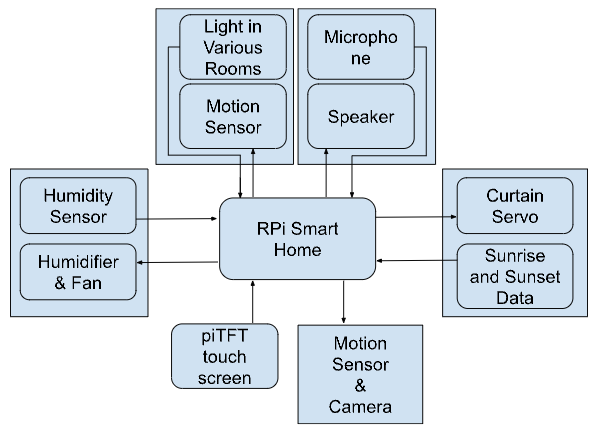

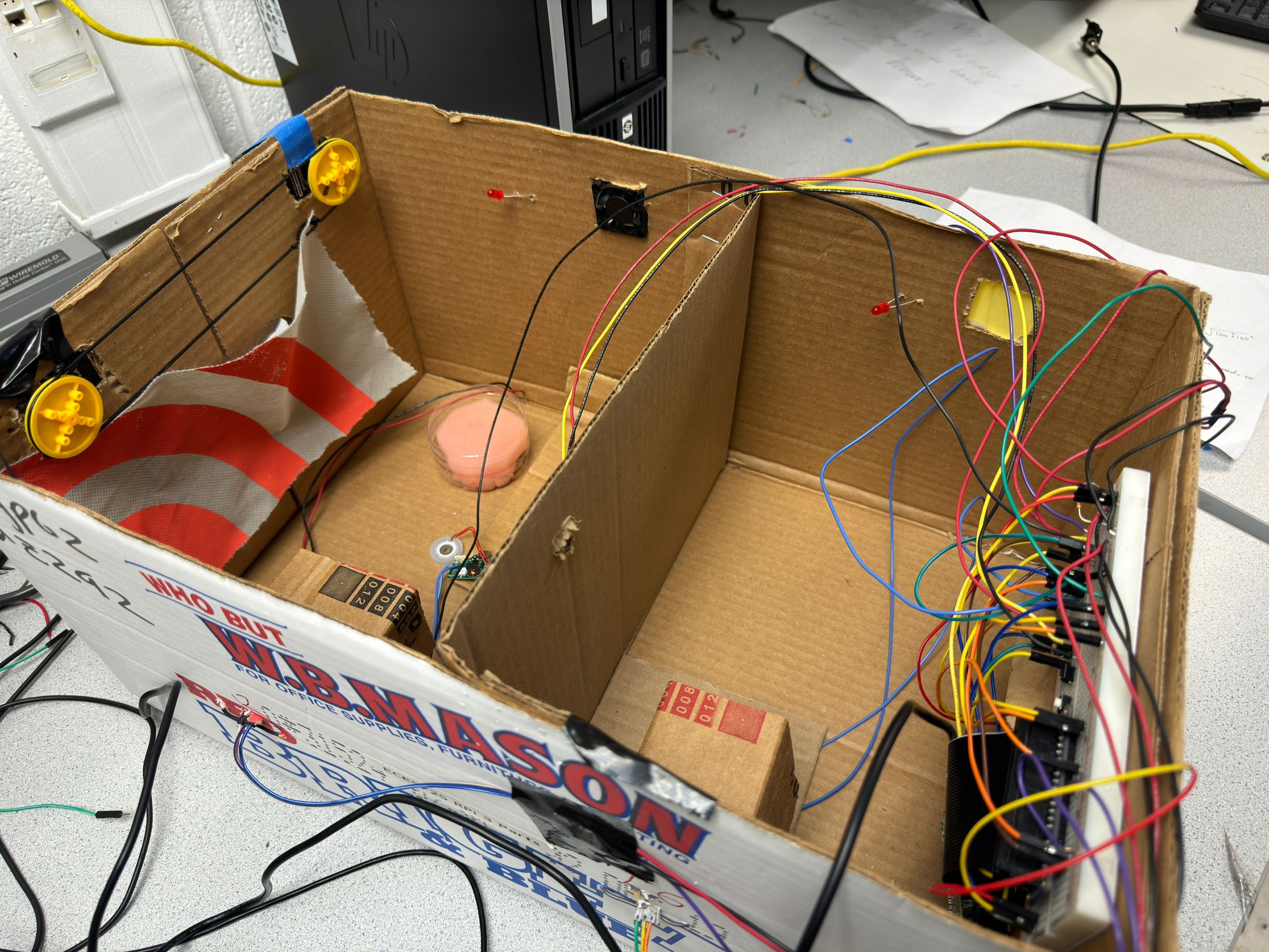

This project aims to create a multifunctional smart home manager. The system features voice control and spoken conversation. It also uses human detection to switch lights automatically, regulates humidity automatically, and opens and closes the curtains based on sunrise and sunset data. In addition, it provides touch control through a user-friendly touch-screen interface and performs face recognition using the Pi camera and OpenCV. A logging system records activity in the rooms. The smart home manager was developed and deployed on a Raspberry Pi 4B. To demonstrate its functionality, we built a small-scale home with multiple rooms, which showcases the assistant's features and illustrates its practical use in a household.

Project Objectives:

- Create a smart home manager capable of handling various tasks and functionalities.

- Add various hardware peripherals to the system.

- Implement voice control and voice response.

- Implement face recognition with OpenCV.

- Implement a logging system backed by a database.

Design

Hardware Design

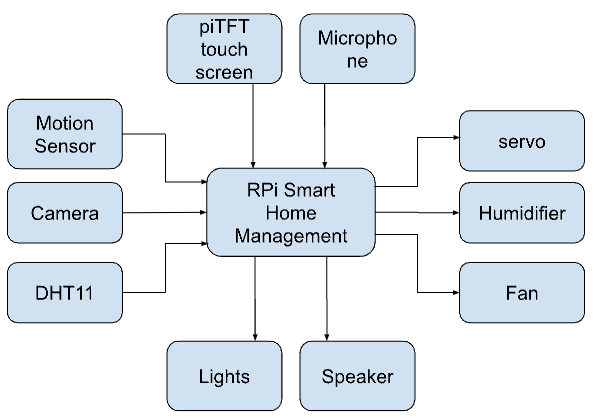

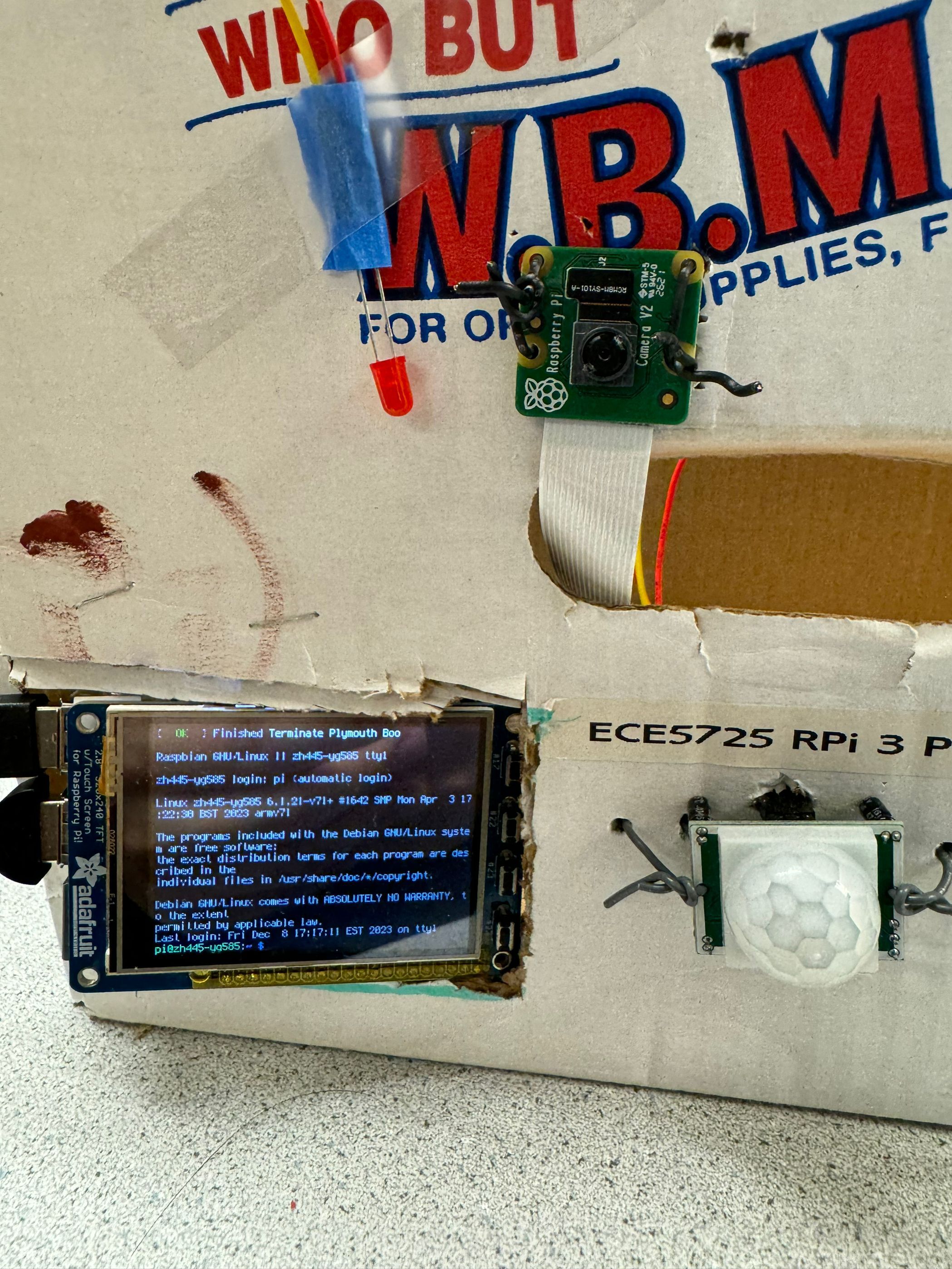

The Raspberry Pi Smart Home Manager uses many hardware peripherals to provide its functions. A Raspberry Pi 4B serves as the central control unit, and manual control is available through the PiTFT touch screen. A DHT11 sensor monitors indoor temperature and humidity. When humidity falls below a lower threshold, the humidifier is switched on to add moisture; when it rises above an upper threshold, the fan is switched on to dehumidify. A servo motor opens and closes the curtains. PIR motion sensors detect occupancy: indoor motion turns on the lights, while motion outside the door triggers the camera to take a photo and run facial recognition. A microphone records the user's voice commands, and the system responds by voice through a speaker. When the user's voice input is a control command, the system operates the indoor devices accordingly.

The DHT11 sensor has three pins. Its VCC and GND pins are connected to the Raspberry Pi's 3.3V and GND pins for power, and its data pin is connected to a GPIO pin. A pull-up resistor is also connected between the data pin and VCC.
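DHT11 reads fail fairly often. The appendix's `dht_helper` simply catches `RuntimeError` and keeps polling, and the Adafruit `adafruit_dht` driver it uses raises `RuntimeError` on a failed read. The sketch below shows one way to wrap a read in a bounded retry loop. It takes any zero-argument read function, so the logic can be exercised without hardware. `read_with_retry`, its parameters, and the fake sensor are hypothetical. On the Pi, `read_fn` might wrap something like `lambda: dhtDevice.humidity`.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def read_with_retry(read_fn: Callable[[], Optional[T]], attempts: int = 5,
                    delay_s: float = 2.0) -> Optional[T]:
    """Call read_fn until it returns a value or the attempts run out.

    Hypothetical helper: a failed read is modeled as RuntimeError, which is
    what the appendix code catches. Returns None if every attempt fails, so
    the caller can keep its previous reading.
    """
    for attempt in range(attempts):
        try:
            value = read_fn()
            if value is not None:  # the appendix's humitemp() also guards against None
                return value
        except RuntimeError:
            pass  # transient read error; try again
        if attempt < attempts - 1:
            time.sleep(delay_s)  # give the sensor time before the next read
    return None


def make_flaky_sensor(failures: int, value: float) -> Callable[[], float]:
    """Fake sensor for testing: fails `failures` times, then returns `value`."""
    state = {"calls": 0}

    def read() -> float:
        state["calls"] += 1
        if state["calls"] <= failures:
            raise RuntimeError("simulated read failure")
        return value

    return read


print(read_with_retry(make_flaky_sensor(2, 42.0), delay_s=0.0))  # prints 42.0
```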

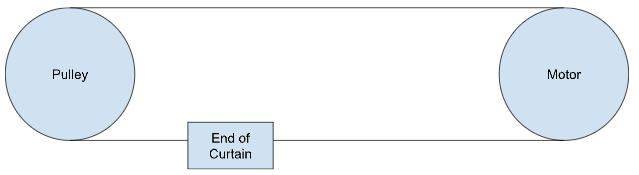

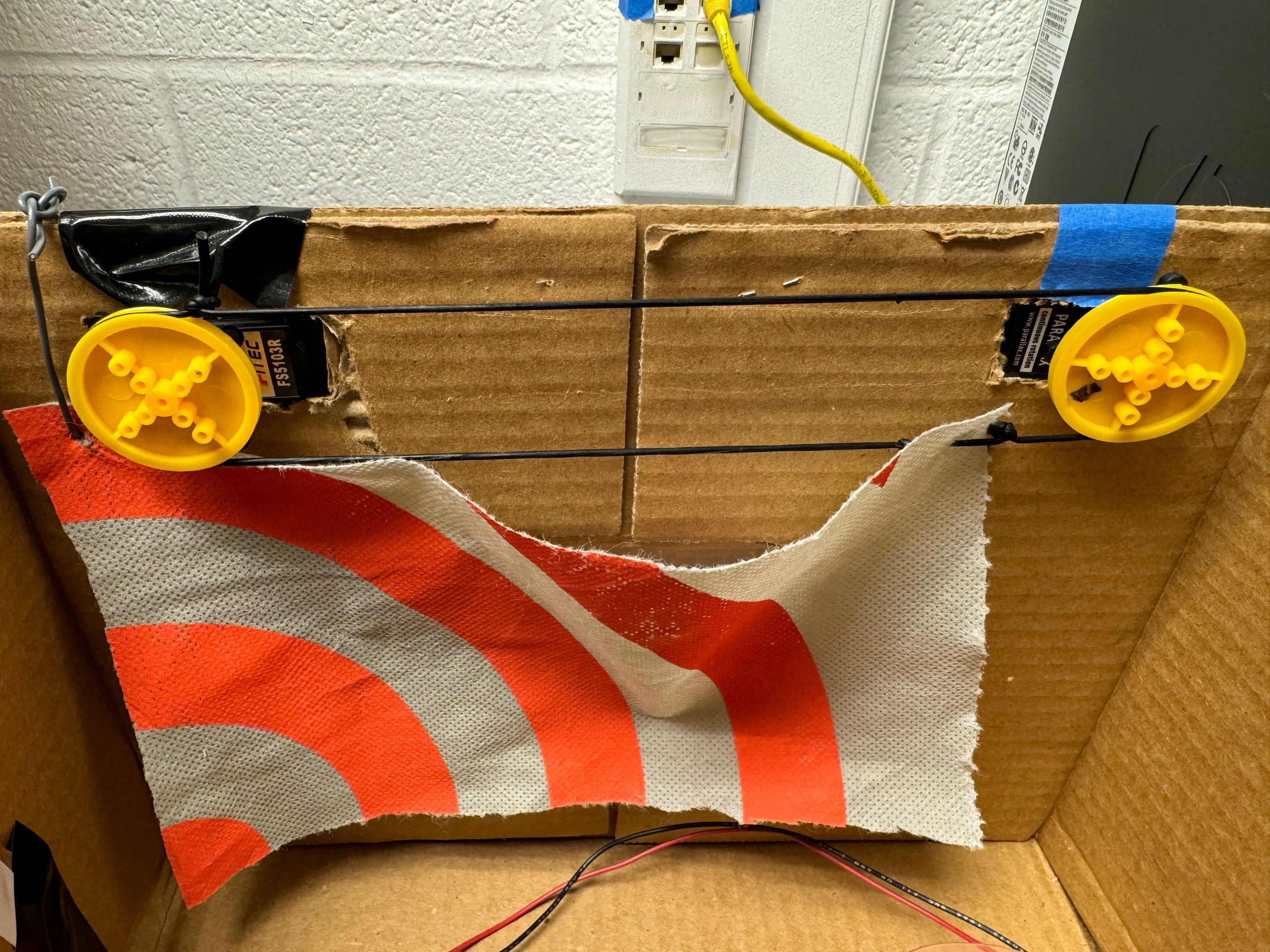

The FS5103R continuous rotation servo has its power and ground wires connected to a 5V supply and ground, respectively. Its control wire is connected to a GPIO pin that generates the PWM signal. To calibrate the servo, set the PWM output to a duty cycle of about 7.5% and turn the calibration screw until the servo stops. This process finds the neutral point at which the servo remains stationary. After calibration, the servo rotates counterclockwise at a duty cycle of 8.5% and clockwise at 6.5%. The mechanical structure for controlling the curtain is shown below. One end of the curtain is attached to a line that is threaded through a pulley and the servo wheel. As the servo rotates, it moves that end of the curtain, opening or closing the curtains.
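The appendix passes 50 Hz to `GPIO.PWM`, which gives a 20 ms period. Each duty cycle therefore maps to a pulse width of duty × 20 ms / 100: 7.5% gives the 1.5 ms neutral pulse, and 8.5% and 6.5% give 1.7 ms and 1.3 ms. The small sketch below computes this mapping and the resulting direction of the calibrated servo described above. `pulse_width_ms`, `servo_direction`, and the tolerance value are hypothetical helpers, not part of the project code.

```python
NEUTRAL_DUTY = 7.5  # % duty at which the calibrated servo stands still


def pulse_width_ms(duty_percent: float, freq_hz: float = 50.0) -> float:
    """Convert a PWM duty cycle (in %) to a pulse width in milliseconds."""
    period_ms = 1000.0 / freq_hz  # 20 ms at 50 Hz
    return duty_percent * period_ms / 100.0


def servo_direction(duty_percent: float, tolerance: float = 0.2) -> str:
    """Rotation of the calibrated continuous-rotation servo for a duty cycle.

    Follows the calibration in the text: above neutral turns
    counterclockwise, below neutral turns clockwise.
    """
    if abs(duty_percent - NEUTRAL_DUTY) <= tolerance:
        return "stop"
    return "counterclockwise" if duty_percent > NEUTRAL_DUTY else "clockwise"


for duty in (6.5, 7.5, 8.5):
    print(f"{duty}% -> {pulse_width_ms(duty):.1f} ms, {servo_direction(duty)}")
```

On the Pi, the appendix applies these values with `pwm1.ChangeDutyCycle(...)`, holds the duty cycle for about a second, and then sets it to 0 to stop sending pulses.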

PIR motion sensors work by detecting changes in infrared radiation within their field of view. When an object moves within the sensor's range, the detected infrared level changes, and the sensor responds by outputting a signal that indicates motion. We connected each sensor's output pin to a GPIO pin to receive these signals. Before use, the PIR motion sensor must be calibrated with its two potentiometers. One sets the delay time, which controls how long the output stays active after motion is detected. The other sets the sensitivity, which controls how far away movement can be detected. We set the sensitivity to its highest level and the delay time to its lowest so that the sensor would respond as quickly and over as wide a range as possible. Even so, the sensor may fail to detect small movements, such as a hand waving in front of it.
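A PIR output stays high for the configured delay time after it fires, so a polling loop sees the same motion many times. Rising-edge detection is one common way to turn that level into single events. The sketch below applies it to a list of sampled readings. It is a generic technique offered for illustration. The appendix does something different: it counts loop iterations with motion and acts only when the counter wraps around. `rising_edges` is a hypothetical name.

```python
from typing import Iterable, List


def rising_edges(samples: Iterable[bool]) -> List[int]:
    """Return the indices where a sampled PIR output goes from low to high."""
    edges = []
    previous = False  # assume the sensor starts idle (output low)
    for i, level in enumerate(samples):
        if level and not previous:
            edges.append(i)
        previous = level
    return edges


# A held-high output (indices 1-3) counts as one motion event, not three.
readings = [False, True, True, True, False, False, True]
print(rising_edges(readings))  # prints [1, 6]
```

On the Pi, the samples would come from repeatedly reading the sensor pin, for example `pir_sensor1.value` in the appendix's `GPIO_helper`.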

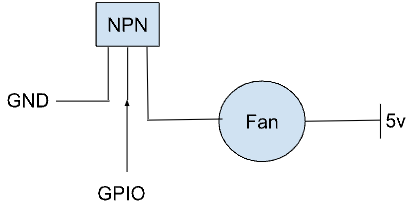

We used an NPN transistor to control the fan. The fan has only two wires, 5V and GND, so it has no control input of its own, and the transistor acts as its switch. The connections are shown in the figure below. From left to right, the three pins of the NPN transistor are the emitter, base, and collector. Driving the base pin from a GPIO pin lets the Raspberry Pi switch the fan: when the GPIO output is high, the fan turns on; otherwise, it turns off.
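When the transistor is used as an on/off switch, the GPIO pin must supply enough base current to saturate it. A rough sizing check follows. It assumes a base resistor $R_B$ between the 3.3 V GPIO pin and the base, and a typical base-emitter drop of about 0.7 V. The values $R_B = 1\,\mathrm{k\Omega}$ and current gain $\beta = 100$ are hypothetical, because the report does not list the resistor or the transistor part.

$$
I_B \approx \frac{V_{\mathrm{GPIO}} - V_{BE}}{R_B} = \frac{3.3\,\mathrm{V} - 0.7\,\mathrm{V}}{1\,\mathrm{k\Omega}} = 2.6\,\mathrm{mA},
\qquad
I_{C,\max} \approx \beta I_B = 100 \times 2.6\,\mathrm{mA} = 260\,\mathrm{mA}
$$

Under these assumptions, the transistor saturates and the fan receives nearly the full 5 V as long as the fan draws well under $\beta I_B$.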

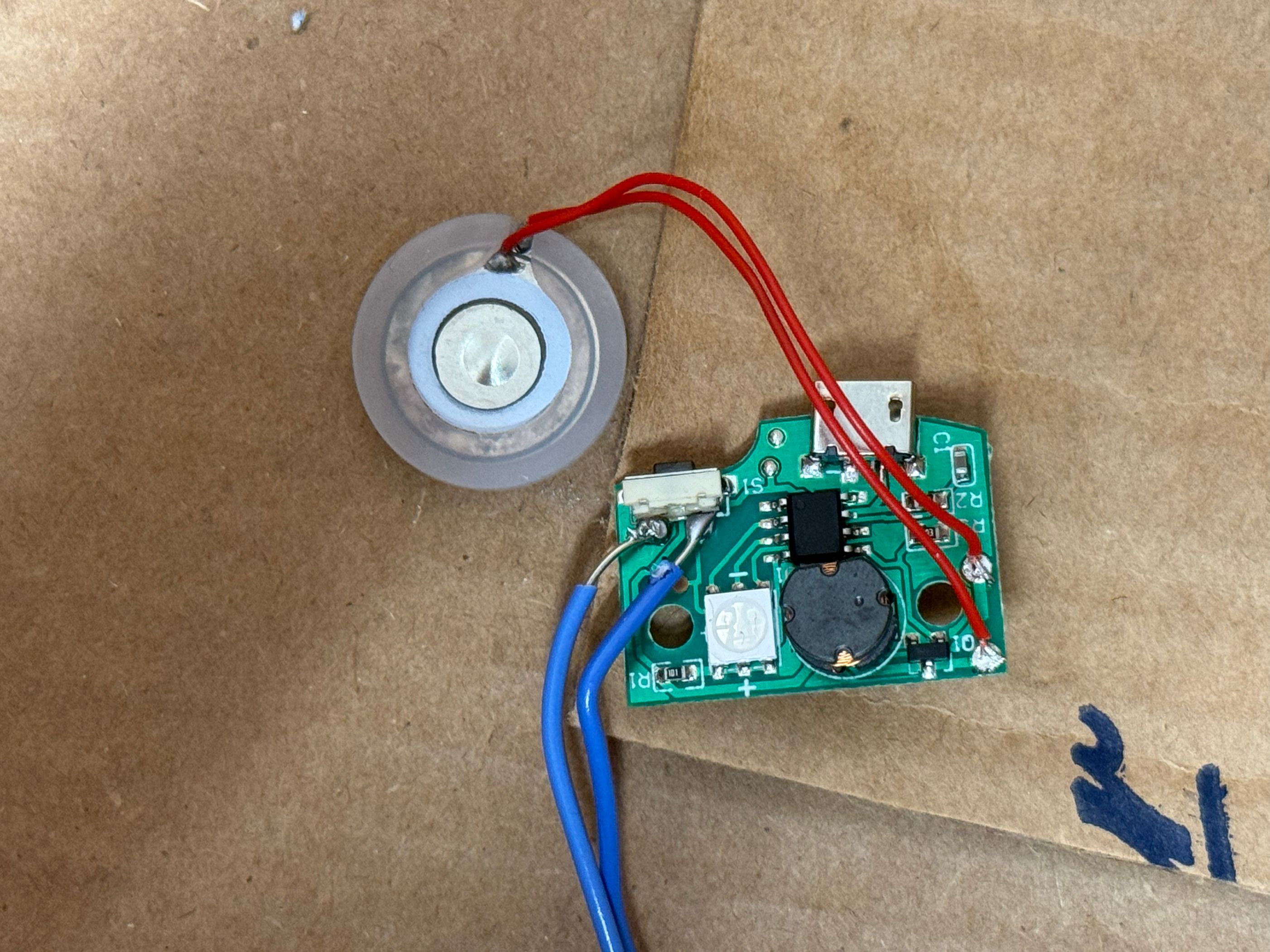

The humidifier we purchased is operated by a simple push switch. To let the Raspberry Pi operate this switch, we used an NPN transistor as a substitute. First, we removed the original switch from the humidifier's circuit. Next, we soldered the transistor's emitter and collector pins to the two points the switch used to connect. Then, we connected the transistor's base pin to a GPIO pin on the Raspberry Pi. When the GPIO output is high, current flows between the collector and emitter, which is equivalent to holding the switch closed. When it is low, the path is open, as if the switch were released. Sending a brief high pulse from the GPIO pin is therefore equivalent to pressing the switch once, and this is how the Raspberry Pi turns the humidifier on and off.
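Because the transistor stands in for a push switch, turning the humidifier on or off means producing a short pulse rather than holding a level. In the appendix, `humid_on_helper` holds the pin high for 0.3 s twice and `humid_off_helper` does so once. The sketch below generalizes both into one hypothetical `press_button` function. The pin writer is passed in as a callback, so the pulse sequence can be checked without hardware. On the Pi, it could be `lambda level: GPIO.output(humid_pin, level)`.

```python
import time
from typing import Callable, List

HIGH, LOW = 1, 0  # same numeric values as RPi.GPIO's GPIO.HIGH / GPIO.LOW


def press_button(write: Callable[[int], None], presses: int = 1,
                 hold_s: float = 0.3, gap_s: float = 0.3) -> None:
    """Simulate pressing a push switch through a transistor on a GPIO pin."""
    for i in range(presses):
        write(HIGH)          # transistor conducts: switch "held down"
        time.sleep(hold_s)
        write(LOW)           # transistor off: switch released
        if i < presses - 1:
            time.sleep(gap_s)


# Record the pin levels instead of driving real hardware.
levels: List[int] = []
press_button(levels.append, presses=2, hold_s=0.0, gap_s=0.0)
print(levels)  # prints [1, 0, 1, 0]
```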

Software Design

Control System Design

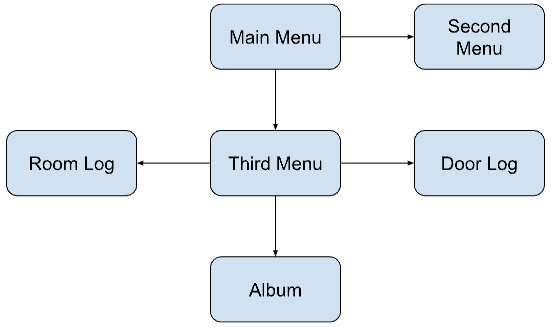

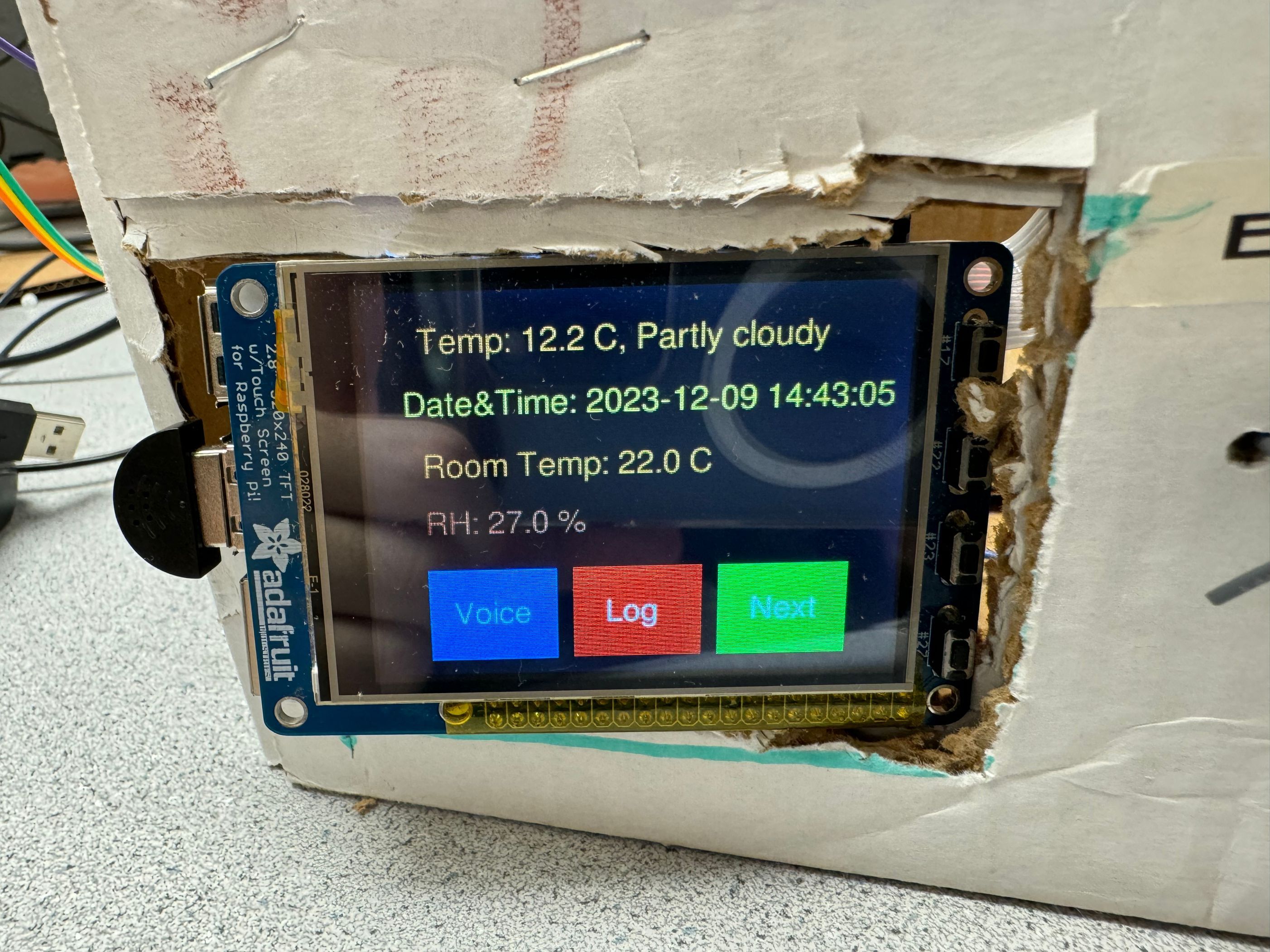

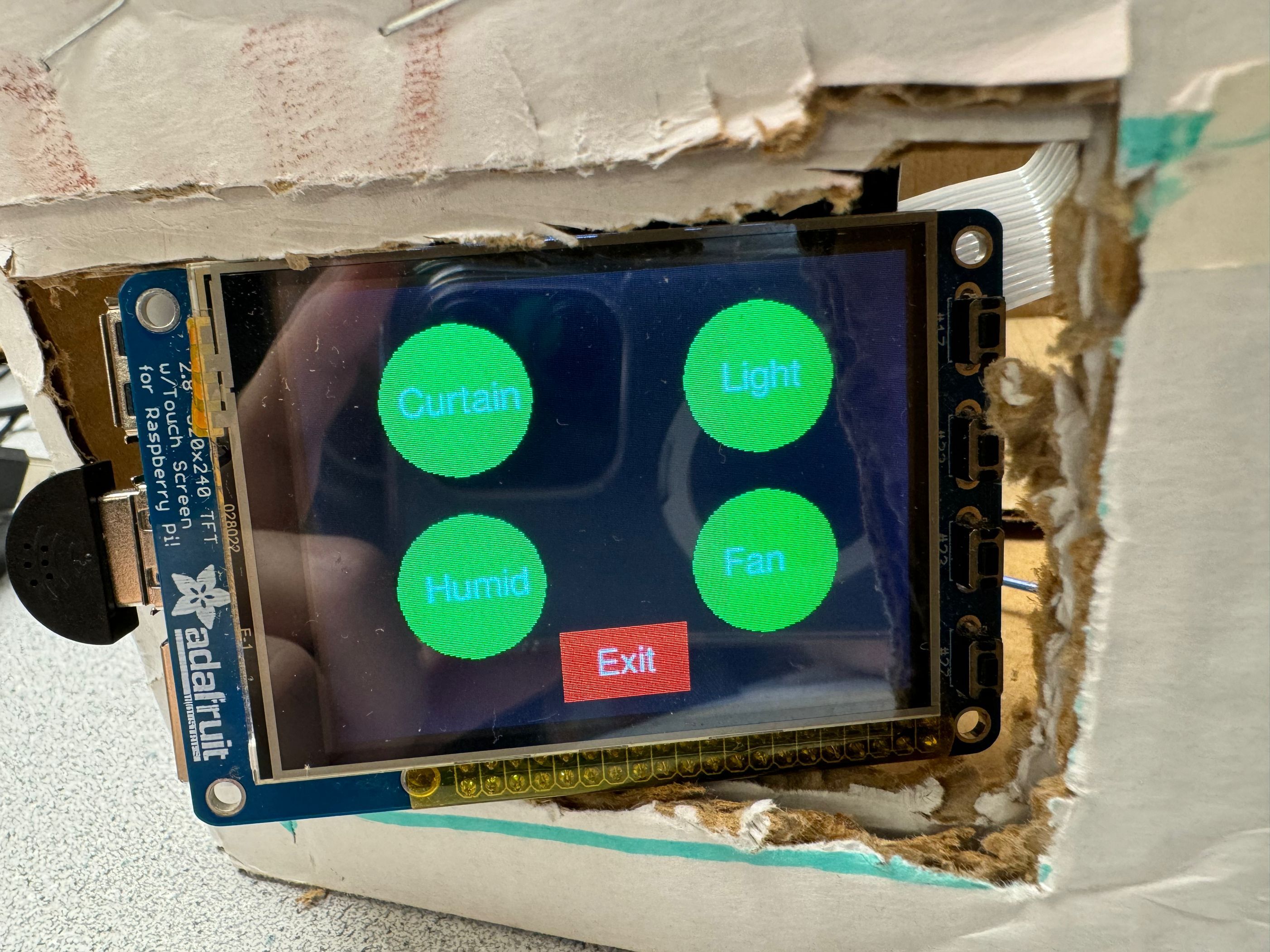

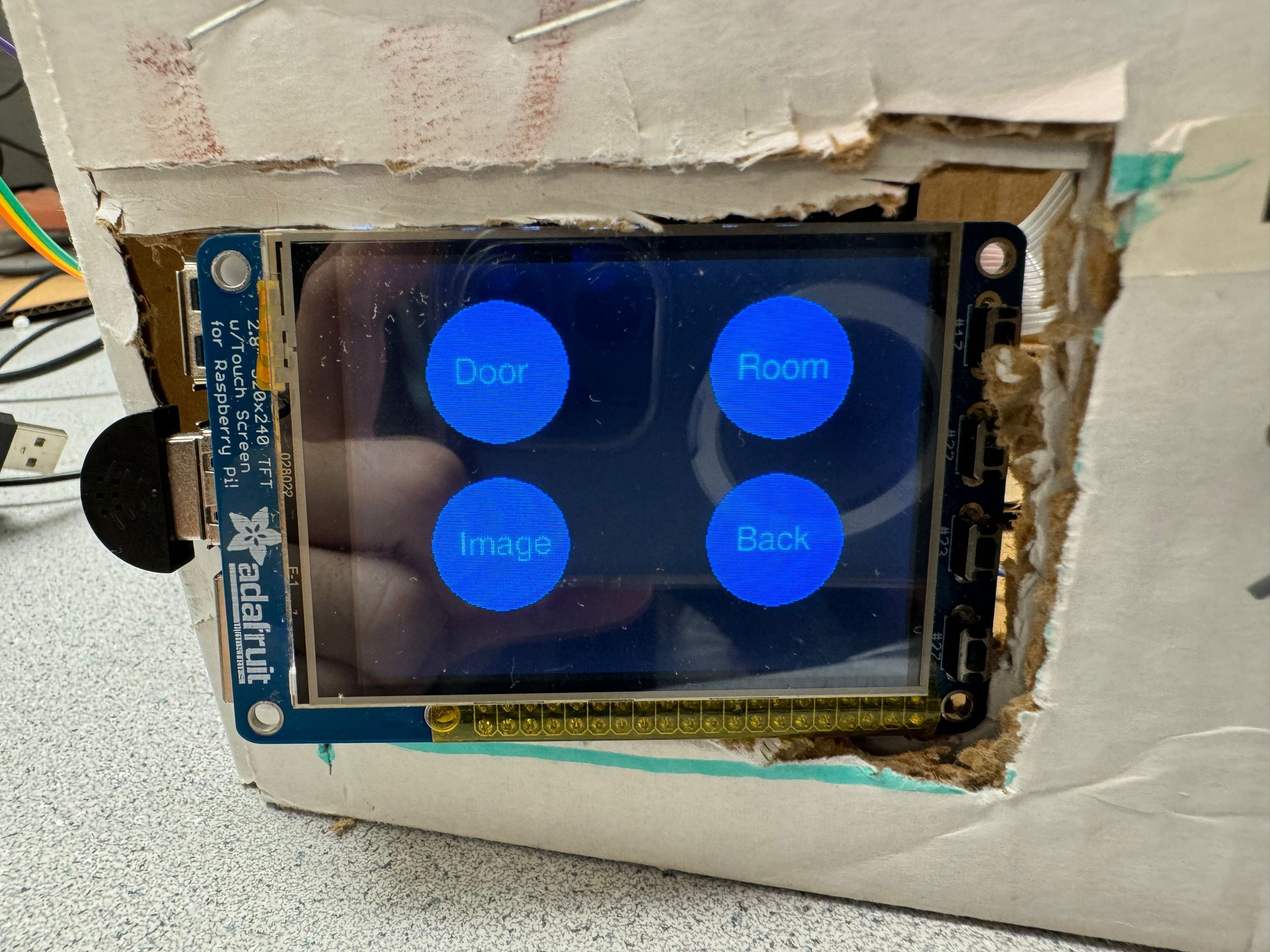

The control system consists of a user interface and a finite state machine. Figure 1 shows the interface switching logic. As Figures 2-4 show, the interface has three layers: the main menu, the appliance menu, and the log. The main menu also displays the current weather, the date and time, the room temperature, and the relative humidity (RH). The finite state machine combines the signals from the sensors and other inputs, switches between menus, and controls the appliances.
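The menu-switching part of the state machine can be written as a transition table. The sketch below uses the layer indices from the appendix's `mainloop`: 1 is the main menu, 2 the appliance menu, 3 the log menu, 4 the image history, 5 the door log, and 6 the room log. The button names are hypothetical labels for the on-screen buttons. The sketch is simplified in two ways. The appendix leaves the log views only on every second tap, and its voice commands can also jump straight to layers 4-6.

```python
# Layer indices as used in the appendix's GUI.mainloop().
MAIN, APPLIANCES, LOG_MENU, IMAGES, DOOR_LOG, ROOM_LOG = 1, 2, 3, 4, 5, 6

# (current layer, button pressed) -> next layer
TRANSITIONS = {
    (MAIN, "next"): APPLIANCES,
    (MAIN, "log"): LOG_MENU,
    (APPLIANCES, "exit"): MAIN,
    (LOG_MENU, "door"): DOOR_LOG,
    (LOG_MENU, "room"): ROOM_LOG,
    (LOG_MENU, "image"): IMAGES,
    (LOG_MENU, "back"): MAIN,
    (IMAGES, "center"): LOG_MENU,
    (DOOR_LOG, "tap"): LOG_MENU,
    (ROOM_LOG, "tap"): LOG_MENU,
}


def next_layer(layer: int, button: str) -> int:
    """Return the next interface layer; other inputs keep the current one.

    Buttons that act on appliances (e.g. "curtain" on the appliance menu)
    are not transitions, so they leave the layer unchanged.
    """
    return TRANSITIONS.get((layer, button), layer)


layer = MAIN
for button in ["log", "door", "tap", "back", "next", "curtain", "exit"]:
    layer = next_layer(layer, button)
    print(button, "->", layer)
```

A table keeps every legal transition in one place, so adding a new screen means adding entries rather than another branch in the event loop.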

Appliance control

There are two appliance modes: manual and automatic. In manual mode, the user controls all the appliances through the touch-screen menu or by voice through the microphone. In automatic mode, the humidifier and the fan are driven by readings from the DHT11 sensor. When the RH is high (above 65% in the code appendix), the humidifier is turned off and the fan is turned on. When the RH is low (below 30%), the reverse happens. The lights in each room and at the door turn on when the corresponding motion sensor detects someone passing by. The curtains follow sunrise and sunset data: when the current time is close to the pre-calculated sunrise or sunset time, the curtains open or close, respectively.
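The automatic rules above amount to a humidity band, with a gap between the two set points, plus a time-window check for the curtains. The sketch below isolates both as pure functions. The function names and the one-minute window are assumptions for illustration (the appendix compares against sunrise and sunset to within one second). The sunrise and sunset strings are assumed to be in `HH:MM:SS` form, as the appendix's `strptime` format implies.

```python
from datetime import datetime, timedelta
from typing import Optional, Tuple

HUMID_LOW = 30   # %RH, value from the code appendix
HUMID_HIGH = 65  # %RH, value from the code appendix


def humidity_action(rh: float) -> Optional[Tuple[bool, bool]]:
    """Desired (humidifier_on, fan_on) for a relative-humidity reading.

    Returns None inside the band so the appliances keep their current
    state; the gap between the thresholds avoids rapid toggling.
    """
    if rh < HUMID_LOW:
        return (True, False)   # too dry: humidifier on, fan off
    if rh > HUMID_HIGH:
        return (False, True)   # too humid: humidifier off, fan on
    return None


def near_event(now: datetime, event_hms: str,
               window: timedelta = timedelta(minutes=1)) -> bool:
    """True if `now` is within `window` of today's event time ("HH:MM:SS")."""
    event_time = datetime.strptime(event_hms, "%H:%M:%S").time()
    # strptime() alone would give a 1900-01-01 date, so attach today's date.
    event = datetime.combine(now.date(), event_time)
    return abs(now - event) <= window


print(humidity_action(25.0), humidity_action(50.0), humidity_action(70.0))
print(near_event(datetime(2023, 12, 1, 7, 10, 30), "07:10:00"))  # prints True
```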

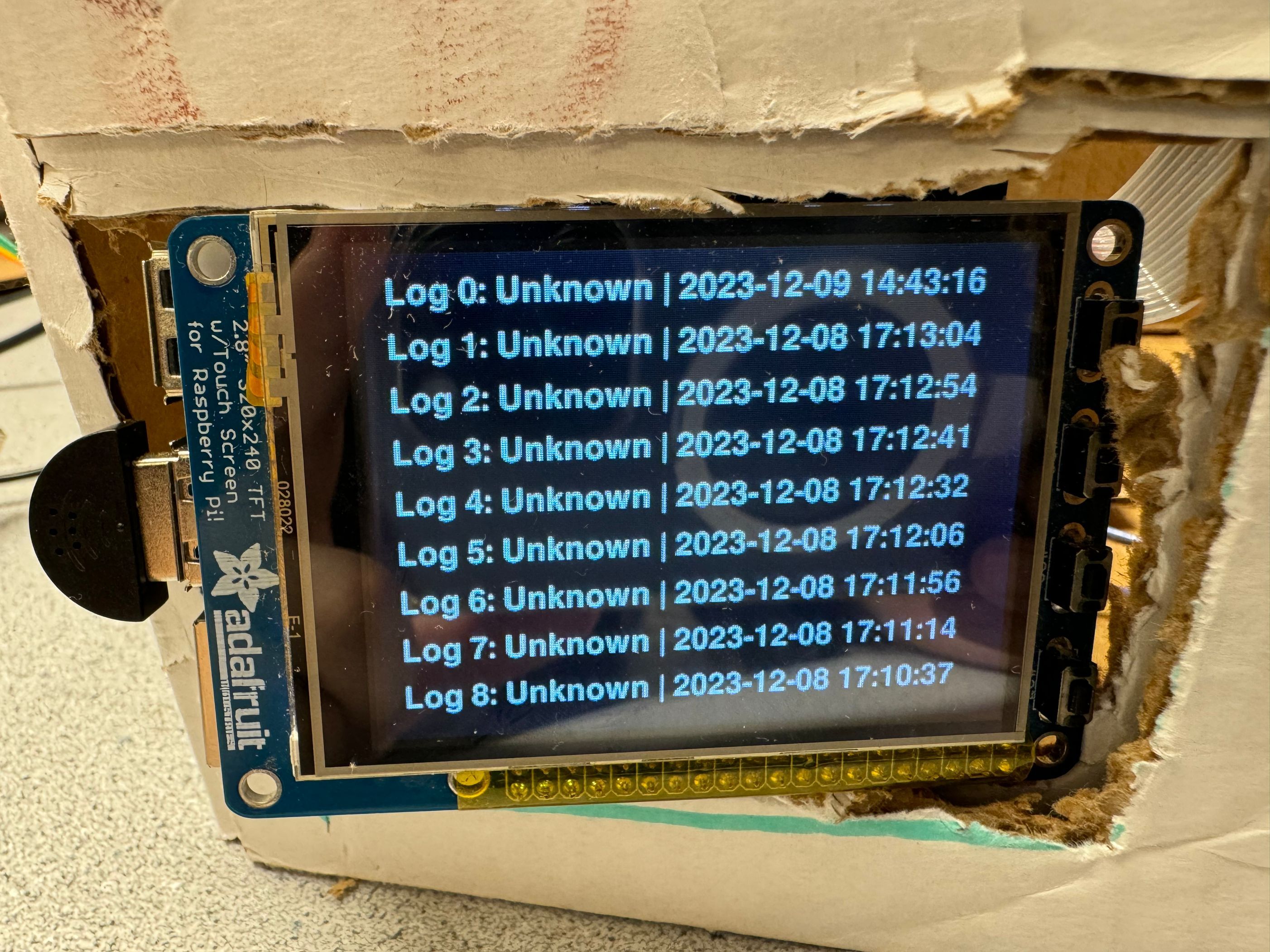

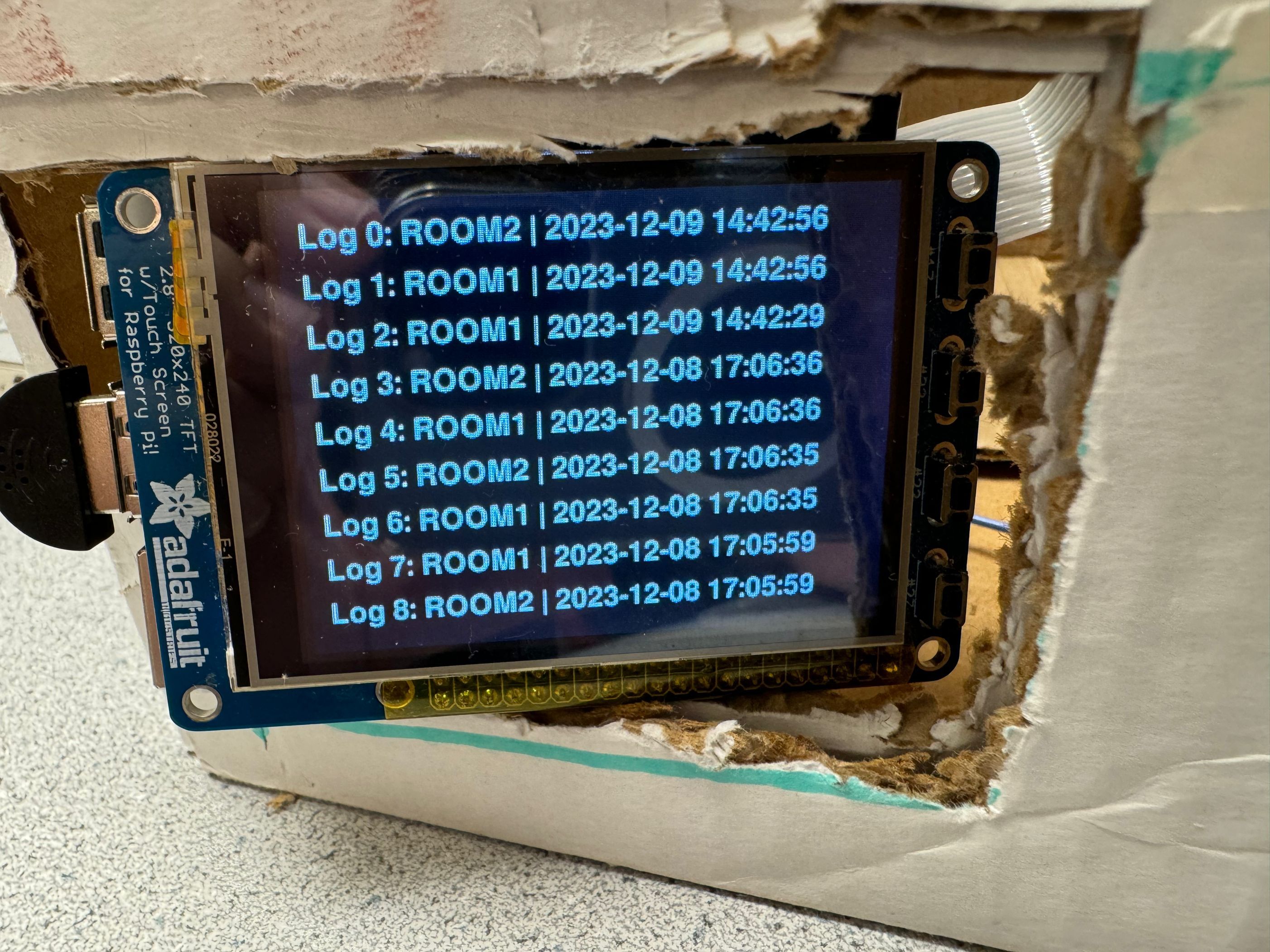

Log System

We use SQLite to store the logs, which can be displayed on the touch screen. In Room 1 and Room 2, when someone passes by, the light turns on and the Raspberry Pi also records the location and time of the event in the database. At the door, when the motion sensor detects someone passing by, the system triggers the camera to take a picture and calls the face recognition function. If no human face is found in the picture, the picture is deleted and the signal is ignored. If a face is found, whether it belongs to a known person or an unknown one, the name and date-time are stored in the database and the picture is saved to the file system. For convenience, both kinds of logs are stored in one database, and columns that do not apply to an entry are set to 'None'.
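The project's `log.py` is not shown in this report, so the schema below is a hypothetical reconstruction using Python's standard `sqlite3` module. The column order (id, location, name, time, image) was chosen so that indices 1-3 line up with the columns the appendix's `draw_logs` displays. Room entries use the placeholders seen in the appendix (`"NONAME"` and `"None"`). The `insert` and `recent` names only loosely mirror the appendix's `log.insert(...)` and `list_recent_entries_by_name_or_location_with_limit(...)`.

```python
import sqlite3
from datetime import datetime
from typing import List, Optional, Tuple

# Hypothetical single-table schema shared by door and room events.
SCHEMA = """
CREATE TABLE IF NOT EXISTS log (
    id        INTEGER PRIMARY KEY AUTOINCREMENT,
    location  TEXT NOT NULL,   -- 'DOOR', 'ROOM1', 'ROOM2'
    name      TEXT NOT NULL,   -- recognized name, 'Unknown', or 'NONAME'
    timestamp TEXT NOT NULL,
    image     TEXT NOT NULL    -- photo path, or 'None' for room events
)
"""


def insert(conn: sqlite3.Connection, location: str, name: str,
           image: str, when: Optional[datetime] = None) -> None:
    when = when or datetime.now()
    conn.execute(
        "INSERT INTO log (location, name, timestamp, image) VALUES (?, ?, ?, ?)",
        (location, name, when.strftime("%Y-%m-%d %H:%M:%S"), image),
    )
    conn.commit()


def recent(conn: sqlite3.Connection, limit: int,
           location: Optional[str] = None,
           name: Optional[str] = None) -> List[Tuple]:
    """Newest-first entries filtered by location (if given) or by name."""
    if location is not None:
        column, value = "location", location
    else:
        column, value = "name", name
    # Column names cannot be bound as parameters; both choices are fixed strings.
    sql = f"SELECT * FROM log WHERE {column} = ? ORDER BY id DESC LIMIT ?"
    return conn.execute(sql, (value, limit)).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
insert(conn, "ROOM1", "NONAME", "None")
insert(conn, "DOOR", "Unknown", "/home/pi/proj/photohistory/image_1.jpg")
insert(conn, "ROOM2", "NONAME", "None")
for row in recent(conn, 9, name="NONAME"):
    print(row[1], row[3])  # location and time, newest first
```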

Voice Control and Response

The voice control system has two entry points: the Voice button on the main menu and a physical button on the side of the PiTFT. Pressing either one starts a recording thread that records sound through the microphone. Pressing it again stops the recording. The Pi then converts the speech to text, using Google's speech recognition through the SpeechRecognition library in the code appendix, and analyzes it. If the text contains predefined keywords, the control program executes the corresponding command, such as controlling an appliance or announcing the current time, the weather, the room temperature, or the RH. The program shuts down if the text contains the word 'terminate'. Voice playback uses a FIFO (named pipe). When the main program starts, we also run a separate audio-playback program that reads text from the FIFO, converts it to speech, and plays it through the speaker.
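Keyword matching on substrings is sensitive to the order of the checks. For example, "humidity" contains "humid", so a question about the humidity can be mistaken for a humidifier command. The sketch below is a hypothetical `parse_command` that maps recognized text to an action name. It uses the appendix's keywords and check order, including "fun", presumably there to catch misrecognitions of "fan". It also lower-cases the text and excludes "humidity" from the humidifier check. The action names are illustrative.

```python
def parse_command(text: str) -> str:
    """Map recognized speech to an action name (hypothetical names)."""
    t = text.lower()
    if "curtain" in t:
        return "toggle_curtain"
    if "light" in t:
        return "toggle_light"
    # "humidity" contains "humid"; leave humidity questions to the report below.
    if "humid" in t and "humidity" not in t:
        return "toggle_humidifier"
    if "fan" in t or "fun" in t:
        return "toggle_fan"
    if "weather" in t or ("temperature" in t and "room" not in t):
        return "report_weather"
    if "room temperature" in t or "humidity" in t:
        return "report_room"
    if "date" in t or "time" in t:
        return "report_time"
    if "image" in t:
        return "show_images"
    if "door" in t:
        return "show_door_log"
    if "room" in t:
        return "show_room_log"
    if "terminate" in t:
        return "shutdown"
    return "unknown"


print(parse_command("What is the humidity"))  # prints report_room
```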

Face recognition

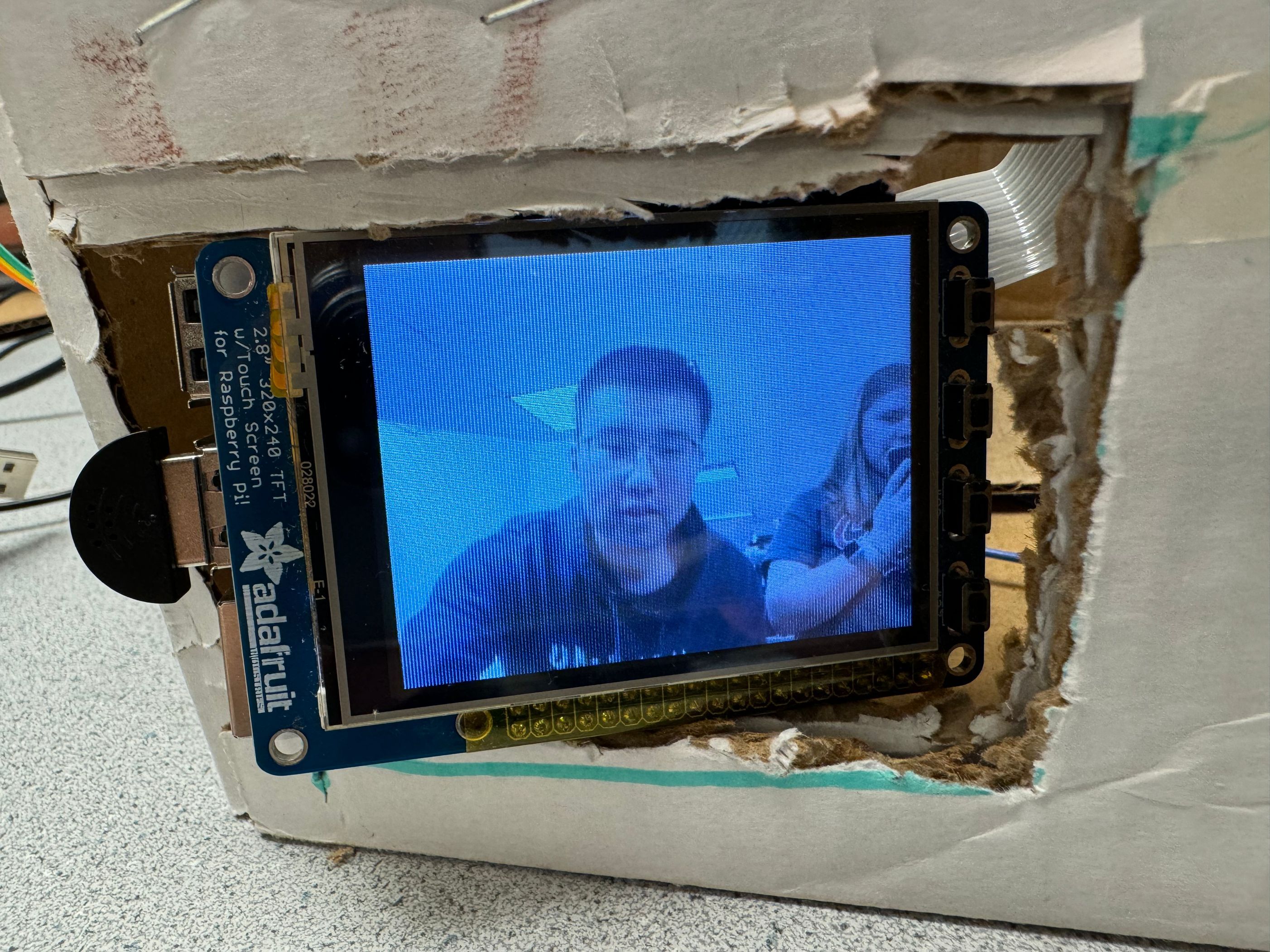

We wrote a facial recognition program that uses the OpenCV library for face detection and recognition. The 'face_recog' class loads a pre-trained face recognition model from the 'trained_faces.yml' file and uses a Haar cascade classifier for frontal face detection. The recognize method captures real-time video from the Pi camera. It reads frames, converts them to grayscale, and detects faces with the Haar cascade. Detected faces are outlined with rectangles on the video frame. For each detected face, the recognizer returns an ID and a confidence value, where lower values indicate a closer match. If the confidence value is below 100, the face is labeled with the name corresponding to the recognized ID. Otherwise, it is marked as 'Unknown'. The method counts how often each recognized person and each unidentified face appears. If no faces are detected, or if no face reaches the threshold count within ten frames, it returns 'No Face'. Otherwise, it saves the detected face image to a specified directory, labeling the images with incremental indices. If the count of 'Unknown' faces exceeds the threshold, it returns 'Unknown' with the image path. Similarly, if the count for a recognized individual exceeds the threshold, it returns that person's name with the image path. If neither condition is met, it returns 'No Face'.
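The counting and threshold step described above can be separated from the camera code. The sketch below is a hypothetical reconstruction of that aggregation step only, because `facerecog.py` is not shown in this report. It assumes the recognizer has already produced a `(label_id, confidence)` pair for each detected face in each captured frame. For example, the `predict()` method of OpenCV's `cv2.face` recognizers returns such a pair. `threshold` stands for the minimum count the text refers to. The function name and the ID-to-name mapping are assumptions.

```python
from collections import Counter
from typing import Dict, List, Tuple

CONFIDENCE_LIMIT = 100  # below this, a prediction is accepted (from the text)


def decide(frames: List[List[Tuple[int, float]]], names: Dict[int, str],
           threshold: int) -> str:
    """Aggregate per-frame face predictions into one result.

    frames: for each captured frame, the (label_id, confidence) pairs of the
    faces detected in it; an empty list means no face in that frame.
    Lower confidence values mean a closer match.
    """
    counts: Counter = Counter()
    for faces in frames:
        for label_id, confidence in faces:
            if confidence < CONFIDENCE_LIMIT:
                counts[names.get(label_id, "Unknown")] += 1
            else:
                counts["Unknown"] += 1
    # Same order as the text: check 'Unknown' first, then recognized people.
    if counts["Unknown"] > threshold:
        return "Unknown"
    for name, n in counts.most_common():
        if name != "Unknown" and n > threshold:
            return name
    return "No Face"


# Ten frames: a known face in six of them, no face in the other four.
print(decide([[(1, 45.0)]] * 6 + [[]] * 4, {1: "Alice"}, threshold=3))  # prints Alice
```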

In the main code, when the front motion sensor detects motion, it triggers the camera to capture a photo and performs facial recognition. The recognition results are saved in the log along with the captured photo.

Drawings and Photos

Testing

Hardware Testing

For hardware, we started with unit testing and then moved to integration testing. Because the project integrates many hardware peripherals, each one had to be tested individually. We first wired all connections according to the specifications and wrote a separate test program for each peripheral. Only after every peripheral had passed its own test did we integrate them into one program for full-system testing.

The two most difficult components to test were the servo motor and the PIR motion sensors. Calibrating the servo and designing the curtain mechanism took a lot of time. Calibrating the PIR motion sensors was also difficult, because the two potentiometers required extensive fine-tuning for good detection. Despite our adjustments, the motion sensors had difficulty detecting the motion of small objects. For example, with two motion sensors placed in separate small rooms, a hand waved in front of a sensor often went undetected. This problem may stem from inherent limitations of the PIR hardware, which make it difficult to detect the infrared signal from small objects such as hands.

Another obstacle was using NPN transistors to control the fan and the humidifier. Because we lacked the transistors' specifications, we initially spent a lot of time characterizing them with oscilloscopes, multimeters, and other equipment. After extensive testing, we confirmed that the NPN transistors were suitable for controlling these devices.

Software Testing

To test our program, we first tested each part separately to make sure it worked correctly. These parts included the appliance control, voice control, touch UI, log system, and automatic control logic. Then we integrated all the parts and ran the main program to check whether they conflicted with each other.

During testing, most of the parts worked well together. However, the longer the system ran, the more its latency grew. Our analysis suggests that the problem may come from too many threads and processes running in the background, which places a heavy load on the Raspberry Pi 4B's relatively low-clocked quad-core CPU. There are two ways to address this. One is to move the system to a more powerful platform. The other is to reduce the number of threads and optimize each one to lower its computational cost.
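In the appendix, each curtain, light, and humidifier action starts a brand-new thread with `threading._start_new_thread`. One way to reduce the thread count, sketched here under assumptions, is to replace that pattern with a small, long-lived pool from the standard library's `concurrent.futures`. The `Actuators` wrapper, the worker count, and the `light_duration` stand-in are hypothetical. The point is that at most `max_workers` threads exist no matter how many actions are queued.

```python
import threading
import time
from concurrent.futures import Future, ThreadPoolExecutor


class Actuators:
    """Run slow actuator actions on a bounded pool instead of new threads."""

    def __init__(self, max_workers: int = 2) -> None:
        self._pool = ThreadPoolExecutor(max_workers=max_workers,
                                        thread_name_prefix="actuator")

    def submit(self, action, *args) -> Future:
        # Queued work waits for a free worker rather than spawning a thread.
        return self._pool.submit(action, *args)

    def shutdown(self) -> None:
        self._pool.shutdown(wait=True)


def light_duration(index: int, seconds: float) -> str:
    # Stand-in for GPIO_helper.light1_duration(): light on, wait, light off.
    time.sleep(seconds)
    return f"light{index} cycled on {threading.current_thread().name}"


act = Actuators(max_workers=2)
futures = [act.submit(light_duration, i, 0.05) for i in (1, 2, 3)]
results = [f.result() for f in futures]
print(results)
act.shutdown()
```

Reusing a fixed set of worker threads also avoids the cost of creating a thread for every sensor event, which matters more as the main loop fires actions more often.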

Results and Conclusions

The Smart Home Manager project is an application of all that we have learned and an exploration of smart system design. This work encompasses a variety of elements that integrate hardware components and software functionality to enable a multifaceted home automation system. Throughout the project, our primary focus was on developing a robust system capable of voice-controlled operations, facial recognition, and diverse environmental control mechanisms.

Our project began with careful hardware design and testing. Because the project included many hardware peripherals, completing the design of all the peripheral circuits was a challenge. We made full use of each peripheral and integrated them into one system. Implementing the software features, such as facial recognition, voice control, and the UI, was also challenging. In the end, we designed an intuitive and interactive interface and added a log system that helps users manage their home environment.

In conclusion, the Smart Home Manager project is a comprehensive exploration of hardware and software integration and embedded Linux technology. Our project implemented all the anticipated functionality and added features that further improve the system's usability. However, because of the Raspberry Pi's limited performance, slight lags may occur while the system is running. In addition, facial recognition can be inaccurate when the photo background is very cluttered, due to limitations of the OpenCV model. Nevertheless, the system works well overall and meets our design expectations. We encountered many software and hardware difficulties along the way and solved them all. The process both consolidated the knowledge we learned in class and further improved our skills.

Future Work

Looking ahead, our foremost goal is to improve the program's performance. Possible approaches include optimizing the multithreading, refining the finite state machine, and reducing the frequency of API calls. In addition, the current prototype is not a fully functional voice assistant, because voice input must be started with a button; adding a voice activation service would solve this. Finally, to make the smart home truly smart, we could integrate the ChatGPT API into the program so that it can give context-aware responses.

Work Distribution

Zidong Huang

zh445@cornell.edu

Focused on the overall software design.

Yuqiang Ge

yg585@cornell.edu

Focused on hardware design and the implementation of face recognition.

Parts List

- Raspberry Pi - Provided in lab

- PiTFT - Provided in lab

- Raspberry Pi Camera V2 $25.00

- Ultrasonic Humidifier $8.00

- Pulleys $5.00

- DHT11 $3.00

- Fan $3.00

- Servo $9.00

- USB Microphone $8.00

- PIR Motion Sensor × 3 $8.00

- Speaker - Provided in lab

- LEDs, Resistors and Wires - Provided in lab

Total: $69.00


Code Appendix

```python
# main.py
import os
import RPi.GPIO as GPIO
import time
import pygame
from pygame.locals import *
from audiorecorder import *
import speech_recognition as sr
from helper import *
from helper import GPIO_helper
from datetime import datetime
from log import logDB
from facerecog import face_recog

# constants: relative-humidity thresholds (%) for automatic control
HUMID_HIGH = 65
HUMID_LOW = 30

# instances of assistant classes
audio = Recorder()
recognizer = sr.Recognizer()
recognizer.dynamic_energy_threshold = True
GP = GPIO_helper()
log = logDB("LOG.db")
face = face_recog()


def audio_to_text(filename):
    speech = sr.AudioFile(filename)
    with speech as source:
        recognizer.adjust_for_ambient_noise(source)
        audio = recognizer.record(source)
    # optionally pass key="<YOUR_API_KEY>"; do not publish a real key
    text = recognizer.recognize_google(audio, language='en-US')
    return text


class GUI():
    layer_index = 1
    recording = 0

    # Initialize pygame and the piTFT display
    os.putenv('SDL_VIDEODRIVER', 'fbcon')  # Display on piTFT
    os.putenv('SDL_FBDEV', '/dev/fb1')
    os.putenv('SDL_MOUSEDRV', 'TSLIB')  # Track mouse clicks on piTFT
    os.putenv('SDL_MOUSEDEV', '/dev/input/touchscreen')
    pygame.init()
    pygame.mouse.set_visible(False)
    screen = pygame.display.set_mode((320, 240))

    # path for image log
    image_folder = '/home/pi/proj/photohistory'
    image_files = [file for file in os.listdir(image_folder)
                   if file.startswith('image_') and file.endswith('.jpg')]
    image_files.sort()
    current_index = len(image_files) - 1

    # Define some colors and fonts
    BLACK = (0, 0, 0)
    WHITE = (255, 255, 255)
    RED = (255, 0, 0)
    GREEN = (0, 255, 0)
    BLUE = (0, 0, 255)
    ICE = (185, 205, 246)
    ORANGE = (255, 165, 0)
    PLANT = (169, 207, 83)
    BROWN = (204, 102, 51)
    FIRE = (226, 88, 34)
    WATER = (102, 255, 230)
    YELLOW = (255, 255, 0)
    VOICECOLOR = (0, 0, 255)
    FONT = pygame.font.SysFont('Arial', 20)
    width, height = 320, 240

    # Define some buttons
    button1 = pygame.Rect(210, 170, 80, 60)  # First layer button
    button2 = pygame.Rect(320 // 2 - 35, 240 - 50, 70, 40)  # Second layer button
    voice_button = pygame.Rect(30, 170, 80, 60)
    log_button = pygame.Rect(120, 170, 80, 60)
    button_positions = [(width // 4, height // 4), (3 * width // 4, height // 4),
                        (width // 4, 4 * height // 6), (3 * width // 4, 4 * height // 6)]
    circle_rect = []
    for position in button_positions:
        rect = pygame.draw.circle(screen, GREEN, position, 40)
        circle_rect.append(rect)

    # Define some variables to keep track of layers and appliance states
    current_layer = 1
    is_new_to_image = 0
    light_on = False
    curtain_open = False
    manual_humid = False
    humidifier_on = False
    fan_on = False
    face_id = 0
    manual_control = False
    room1counter = 0
    room2counter = 1
    lastroom = 0
    curroom = 0
    temperature = 0
    humidity = 0

    # acquire some variables
    GP.dht_init()
    temperature, humidity = GP.humitemp()
    start_time = time.time()
    weather = acquire_Ithaca_weather()
    sunrise_today = sunrise()
    sunset_today = sunset()
    door_counter = 0

    def __init__(self):
        GPIO.setmode(GPIO.BCM)
        GPIO.setup(17, GPIO.IN, pull_up_down=GPIO.PUD_UP)  # exit button
        GPIO.setup(22, GPIO.IN, pull_up_down=GPIO.PUD_UP)  # face recognition button

    # Define a function to draw the first layer
    def draw_first_layer(self):
        if int(time.time() - self.start_time) % 120 == 0:
            self.weather = acquire_Ithaca_weather()
        screen = self.screen
        FONT = self.FONT
        # Clear the screen
        screen.fill(self.BLACK)
        # Draw the buttons: Voice, Log, and Next (to the second layer)
        pygame.draw.rect(self.screen, self.GREEN, self.button1)
        pygame.draw.rect(self.screen, self.VOICECOLOR, self.voice_button)
        pygame.draw.rect(self.screen, self.RED, self.log_button)
        screen.blit(FONT.render('Log', True, self.WHITE), (140, 190))
        screen.blit(FONT.render('Voice', True, self.WHITE), (45, 190))
        screen.blit(FONT.render('Next', True, self.WHITE), (230, 190))
        # Get the current time, temperature and RH
        now = datetime.now()
        current_time = now.strftime("%Y-%m-%d %H:%M:%S")
        humidity, temperature = self.humidity, self.temperature
        # Display the weather, time, temperature and RH
        weather_str = 'Temp: ' + str(self.weather[0]) + ' C, ' + str(self.weather[1])
        if self.weather[0] < 10:
            screen.blit(FONT.render(weather_str, True, self.ICE), (30, 20))
        else:
            screen.blit(FONT.render(weather_str, True, self.ORANGE), (30, 20))
        screen.blit(FONT.render('Date&Time: ' + current_time, True, self.YELLOW), (20, 57))
        room_temp_str = 'Room Temp: {:.1f} C'.format(temperature)
        if 15 < temperature < 25:
            screen.blit(FONT.render(room_temp_str, True, self.ORANGE), (30, 95))
        elif temperature < 0:
            screen.blit(FONT.render(room_temp_str, True, self.ICE), (30, 95))
        else:
            screen.blit(FONT.render(room_temp_str, True, self.FIRE), (30, 95))
        rh_str = 'RH: {:.1f} %'.format(humidity)
        if humidity > HUMID_HIGH:
            screen.blit(FONT.render(rh_str, True, self.WATER), (30, 131))
        elif humidity < HUMID_LOW:
            screen.blit(FONT.render(rh_str, True, self.BROWN), (30, 131))
        else:
            screen.blit(FONT.render(rh_str, True, self.PLANT), (30, 131))
        # Update the display
        pygame.display.flip()

    # Define a function to draw the second layer (appliance menu)
    def draw_second_layer(self):
        width, height = 320, 240
        screen = self.screen
        FONT = self.FONT
        screen.fill(self.BLACK)
        # Display round buttons for curtain, light, humidifier, and fan
        button_radius = 40
        for position in self.button_positions:
            pygame.draw.circle(self.screen, self.GREEN, position, button_radius)
        screen.blit(FONT.render('Curtain', True, self.WHITE), (width // 4 - 30, height // 4 - 10))
        screen.blit(FONT.render('Light', True, self.WHITE), (3 * width // 4 - 20, height // 4 - 10))
        screen.blit(FONT.render('Humid', True, self.WHITE), (width // 4 - 25, 4 * height // 6 - 10))
        screen.blit(FONT.render('Fan', True, self.WHITE), (3 * width // 4 - 23, 4 * height // 6 - 10))
        # Display 'Exit' button
        pygame.draw.rect(self.screen, self.RED, (width // 2 - 35, height - 50, 70, 40))
        exit_button_text = FONT.render('Exit', True, self.WHITE)
        screen.blit(exit_button_text, (width // 2 - exit_button_text.get_width() // 2, height - 40))
        pygame.display.flip()

    # Define a function to draw the third layer (log menu)
    def draw_third_layer(self):
        width, height = 320, 240
        screen = self.screen
        FONT = self.FONT
        screen.fill(self.BLACK)
        # Display round buttons for door log, room log, image history, and back
        button_radius = 40
        for position in self.button_positions:
            pygame.draw.circle(self.screen, self.BLUE, position, button_radius)
        screen.blit(FONT.render('Door', True, self.WHITE), (width // 4 - 25, height // 4 - 10))
        screen.blit(FONT.render('Room', True, self.WHITE), (3 * width // 4 - 25, height // 4 - 10))
        screen.blit(FONT.render('Image', True, self.WHITE), (width // 4 - 25, 4 * height // 6 - 10))
        screen.blit(FONT.render('Back', True, self.WHITE), (3 * width // 4 - 23, 4 * height // 6 - 10))
        pygame.display.flip()

    # Define a function to show the images on the screen
    def show_images(self):
        screen = self.screen
        self.image_files = [file for file in os.listdir(self.image_folder)
                            if file.startswith('image_') and file.endswith('.jpg')]
        self.image_files = sorted(self.image_files, key=extract_number)
        if self.is_new_to_image == 0:
            self.current_index = len(self.image_files) - 1  # start at the newest image
        self.image_path = os.path.join(self.image_folder, self.image_files[self.current_index])
        image = pygame.image.load(self.image_path)
        image = pygame.transform.scale(image, (320, 240))
        image_rect = image.get_rect()
        screen_rect = screen.get_rect()
        image_rect.center = screen_rect.center
        screen.blit(image, image_rect)
        pygame.display.flip()

    # Define a function to draw the log layers (5: door log, 6: room log)
    def draw_logs(self):
        screen = self.screen
        screen.fill(self.BLACK)  # Clear the screen
        font = pygame.font.Font(None, 24)  # Choose a font and size
        start_x = 10
        start_y = 10
        line_height = 25
        if self.layer_index == 5:
            logs = log.list_recent_entries_by_name_or_location_with_limit(9, location="DOOR")
            columns = [2, 3]
        elif self.layer_index == 6:
            logs = log.list_recent_entries_by_name_or_location_with_limit(9, name="NONAME")
            columns = [1, 3]
        else:
            return
        for count, cur in enumerate(logs):
            # Select the specified columns from the log entry
            selected_columns = [cur[index] for index in columns]
            # Create the display string with the selected columns
            display_string = f'Log {count}: ' + ' | '.join(str(item) for item in selected_columns)
            # Render the text
            text = font.render(display_string, True, self.WHITE)
            screen.blit(text, (start_x, start_y + count * line_height))
        # Update the display
        pygame.display.flip()

    # main loop: draws the GUI and runs the finite state machine
    def mainloop(self):
        code_run = True
        click_counter = 0
        time_limit = 1200
        while code_run:
            # exit button
            if not GPIO.input(17):
                GPIO.cleanup()
                pygame.quit()
                exit()
            # sunrise/sunset time update and check
            if int(time.time() - self.start_time) % 3600 == 0:
                self.sunrise_today = sunrise()
                self.sunset_today = sunset()
            current_time = datetime.now()
            # strptime() alone yields a 1900-01-01 date, so attach today's date
            today = current_time.date()
            sunrise_dt = datetime.combine(today, datetime.strptime(self.sunrise_today, '%H:%M:%S').time())
            sunset_dt = datetime.combine(today, datetime.strptime(self.sunset_today, '%H:%M:%S').time())
            total_seconds1 = (current_time - sunrise_dt).total_seconds()
            total_seconds2 = (current_time - sunset_dt).total_seconds()
            if abs(total_seconds1) < 1:
                if not self.curtain_open:
                    GP.curtain_open()
                    self.curtain_open = True
            elif abs(total_seconds2) < 1:
                if self.curtain_open:
                    GP.curtain_close()
                    self.curtain_open = False
            # time out
            if time.time() - self.start_time > time_limit:
                print("timeout")
                code_run = False
            # three motion sensors: door, room 1, room 2
            if GP.motiondect1():
                name, filepath = face.recognize(2)
                if name != "No Face":
                    print(name + " detected")
                    log.insert("DOOR", name, filepath)
                    GP.light3_duration()
            if GP.motiondect2():
                # count loop iterations with motion; act when the counter wraps to 0
                self.room1counter = (self.room1counter + 1) % 1000
                if self.room1counter == 0:
                    self.curroom = 1
                    if self.curroom != self.lastroom:
                        print("ROOM1")
                        GP.light1last()
                        log.insert("ROOM1", "NONAME", "None")
                        self.lastroom = 1
            if GP.motiondect3():
                self.room2counter = (self.room2counter + 1) % 1000
                if self.room2counter == 0:
                    self.curroom = 2
                    if self.curroom != self.lastroom:
                        print("ROOM2")
                        GP.light2last()
                        log.insert("ROOM2", "NONAME", "None")
                        self.lastroom = 2
            # button for face recognition
            if not GPIO.input(22):
                name, filepath = face.recognize(2)
                if name != "No Face":
                    print(name + " detected")
                    log.insert("DOOR", name, filepath)
                    GP.light3_duration()
                    speak(name + " is knocking at the door")
            # humidifier and fan control
            self.temperature, self.humidity = GP.humitemp()
            if self.humidity < HUMID_LOW:  # and not self.manual_control:
                if not self.humidifier_on:
                    GP.humid_on()
                    self.humidifier_on = True
                if self.fan_on:
                    GP.fan_off()
                    self.fan_on = False
            if self.humidity > HUMID_HIGH:  # and not self.manual_control:
                if self.humidifier_on:
                    GP.humid_off()
                    self.humidifier_on = False
                if not self.fan_on:
                    GP.fan_on()
                    self.fan_on = True
            # draw layers
            if self.layer_index == 1:
                self.draw_first_layer()
            elif self.layer_index == 2:
                self.draw_second_layer()
            elif self.layer_index == 3:
                self.draw_third_layer()
            elif self.layer_index == 4:
                self.show_images()
            elif self.layer_index in (5, 6):
                self.draw_logs()
            # click detection and state switch
            pos = 0, 0
            for event in pygame.event.get():
                if event.type == MOUSEBUTTONDOWN:
                    pos = pygame.mouse.get_pos()
                    pygame.event.clear([pygame.MOUSEBUTTONDOWN])
                    if self.layer_index == 1:
                        print(pos)
                        if self.button1.collidepoint(pos):
                            self.layer_index = 2
                        elif self.voice_button.collidepoint(pos):
                            if self.recording == 0:
                                audio.start()
                                self.recording = 1
                                print("recording start")
                                self.VOICECOLOR = self.YELLOW
                            elif self.recording == 1:
                                self.VOICECOLOR = self.BLUE
                                audio.stop()
                                audio.save("recording")
                                self.recording = 0
                                print("recording stop")
                                command = "NONE"
                                try:
                                    command = audio_to_text("recording.wav")
                                    print(command)
                                except Exception:
                                    print("fail to recognize")
                                    speak("fail to recognize")
                                if "curtain" in command:
                                    if not self.curtain_open:
                                        GP.curtain_open()
                                        self.curtain_open = True
                                    else:
                                        GP.curtain_close()
                                        self.curtain_open = False
                                elif "light" in command:
                                    if not self.light_on:
                                        GP.light_on(1)
                                        self.light_on = True
                                    else:
                                        GP.light_off(1)
                                        self.light_on = False
                                # "humidity" contains "humid"; leave humidity questions to the report branch
                                elif "humid" in command and "humidity" not in command:
                                    if not self.humidifier_on:
                                        GP.humid_on()
                                        self.humidifier_on = True
                                        self.manual_control = True
                                    else:
                                        GP.humid_off()
                                        self.humidifier_on = False
                                        if not self.humidifier_on and not self.fan_on:
                                            self.manual_control = False
                                elif "fan" in command or "fun" in command:
                                    if not self.fan_on:
                                        GP.fan_on()
                                        self.fan_on = True
                                        self.manual_control = True
                                    else:
                                        GP.fan_off()
                                        self.fan_on = False
                                        if not self.humidifier_on and not self.fan_on:
                                            self.manual_control = False
                                elif "weather" in command or ("temperature" in command and "room" not in command):
                                    temp, cond = acquire_Ithaca_weather()
                                    speak("Current temperature: " + str(temp) + " Celsius degree, Condition: " + str(cond))
                                elif "room temperature" in command or "humidity" in command:
                                    humid, roomtemp = self.humidity, self.temperature
                                    speak("Room temperature: " + str(roomtemp) + " Celsius degree, Relative Humidity: " + str(humid) + " percent")
                                elif "date" in command or "time" in command:
                                    now = datetime.now()
                                    datestring = "it is " + now.strftime("%A, %B %d, %Y, %I:%M %p")
                                    print(datestring)
                                    speak(datestring)
                                elif "image" in command:
                                    self.layer_index = 4
                                    self.is_new_to_image = 0
                                elif "door" in command:
                                    self.layer_index = 5
                                elif "room" in command:
                                    self.layer_index = 6
                                elif "terminate" in command:
                                    # shut down cleanly, as the exit button does
                                    GPIO.cleanup()
                                    pygame.quit()
                                    exit()
                        elif self.log_button.collidepoint(pos):
                            self.layer_index = 3
                    elif self.layer_index == 2:
                        if self.button2.collidepoint(pos):
                            self.layer_index = 1
                        if self.circle_rect[0].collidepoint(pos):
                            if not self.curtain_open:
                                GP.curtain_open()
                                self.curtain_open = True
                            else:
                                GP.curtain_close()
                                self.curtain_open = False
                        if self.circle_rect[1].collidepoint(pos):
                            if not self.light_on:
                                GP.light_on(1)
                                self.light_on = True
                            else:
                                GP.light_off(1)
                                self.light_on = False
                        if self.circle_rect[2].collidepoint(pos):
                            if not self.humidifier_on:
                                GP.humid_on()
                                self.humidifier_on = True
                                self.manual_control = True
                            else:
                                GP.humid_off()
                                self.humidifier_on = False
                                if not self.humidifier_on and not self.fan_on:
                                    self.manual_control = False
                        if self.circle_rect[3].collidepoint(pos):
                            if not self.fan_on:
                                GP.fan_on()
                                self.fan_on = True
                                self.manual_control = True
                            else:
                                GP.fan_off()
                                self.fan_on = False
                                if not self.humidifier_on and not self.fan_on:
                                    self.manual_control = False
                    elif self.layer_index == 3:
                        if self.circle_rect[0].collidepoint(pos):
                            self.layer_index = 5
                        if self.circle_rect[1].collidepoint(pos):
                            self.layer_index = 6
                        if self.circle_rect[2].collidepoint(pos):
                            self.layer_index = 4
                            self.is_new_to_image = 0
                        if self.circle_rect[3].collidepoint(pos):
                            self.layer_index = 1
                    elif self.layer_index == 4:
                        # left third: previous image, middle: back, right third: next image
                        mouse_x, mouse_y = event.pos
                        if 100 < mouse_x < 220:
                            self.layer_index = 3
                        elif mouse_x < 100:
                            self.is_new_to_image = 1
                            self.current_index = (self.current_index - 1) % len(self.image_files)
                        elif mouse_x > 220:
                            self.is_new_to_image = 1
                            self.current_index = (self.current_index + 1) % len(self.image_files)
                    elif self.layer_index in (5, 6):
                        # return to the log menu on every second tap
                        click_counter = (click_counter + 1) % 2
                        if click_counter == 0:
                            self.layer_index = 3


menu = GUI()
try:
    menu.mainloop()
except KeyboardInterrupt:
    GPIO.cleanup()
    pygame.quit()
    exit()
```

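The GUI branches set `self.manual_control` whenever the humidifier or fan is switched on by voice or touch, and clear it once both are off. Presumably this stops the automatic humidity regulation from overriding a manual choice. The sketch below shows one way to write such an automatic rule. It assumes hypothetical thresholds of 30 % and 60 % relative humidity and treats the 999 that `humitemp()` substitutes for a failed DHT11 read as "no data". The project's actual thresholds and control loop do not appear in this listing, and `auto_humidity_action` is a hypothetical name.

```python
from typing import Optional, Tuple

# Hypothetical thresholds (percent relative humidity); not taken from the project
LOW_RH = 30.0
HIGH_RH = 60.0
FAILED_READING = 999  # value GPIO_helper.humitemp() substitutes for a failed DHT11 read


def auto_humidity_action(humidity: float, manual_control: bool,
                         low: float = LOW_RH,
                         high: float = HIGH_RH) -> Optional[Tuple[bool, bool]]:
    """Return the desired (humidifier_on, fan_on) pair, or None to leave both devices alone."""
    if manual_control or humidity == FAILED_READING:
        return None            # a manual choice or no valid reading: change nothing
    if humidity < low:
        return (True, False)   # too dry: humidifier on, fan off
    if humidity > high:
        return (False, True)   # too humid: fan on, humidifier off
    return (False, False)      # within the band: both off


if __name__ == "__main__":
    for rh in (25.0, 45.0, 70.0, 999):
        print(rh, auto_humidity_action(rh, manual_control=False))
```

`humid_on()` and `humid_off()` pulse the humidifier's control input rather than setting a level. A caller should therefore presumably invoke them only when the desired state differs from `self.humidifier_on`, not on every polling pass.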
```python
# helper.py
import os
import re
import threading
import time
from datetime import datetime

import adafruit_dht
import board
import digitalio
import requests
import RPi.GPIO as GPIO
from astral import LocationInfo
from astral.sun import sun

DURATION = 5  # seconds that light1last()/light2last() keep a light on


def dht_start():
    # Must be defined before GPIO_helper, whose class body calls it at import time
    while True:
        try:
            sensor = adafruit_dht.DHT11(board.D26)
            return sensor
        except Exception as e:
            print(f"Failed to initialize DHT11 sensor: {e}. Retrying...")
            time.sleep(0.5)  # wait 0.5 s before retrying


# a class that controls all GPIOs; the pins are set up once, when the class body runs
class GPIO_helper():
    GPIO.setmode(GPIO.BCM)

    # curtain servo: 50 Hz PWM on BCM 6
    servo1_pin = 6
    GPIO.setup(servo1_pin, GPIO.OUT)
    pwm1 = GPIO.PWM(servo1_pin, 50)
    pwm1.start(0)

    fan_pin = 4
    GPIO.setup(fan_pin, GPIO.OUT)
    GPIO.output(fan_pin, GPIO.LOW)

    humid_pin = 20
    GPIO.setup(humid_pin, GPIO.OUT)
    GPIO.output(humid_pin, GPIO.LOW)

    # PIR motion sensors
    pir_sensor1 = digitalio.DigitalInOut(board.D23)
    pir_sensor1.direction = digitalio.Direction.INPUT
    pir_sensor2 = digitalio.DigitalInOut(board.D12)
    pir_sensor2.direction = digitalio.Direction.INPUT
    pir_sensor3 = digitalio.DigitalInOut(board.D16)
    pir_sensor3.direction = digitalio.Direction.INPUT

    # room lights
    led_pin_1 = 19
    led_pin_2 = 21
    led_pin_3 = 5
    GPIO.setup(led_pin_1, GPIO.OUT)
    GPIO.setup(led_pin_2, GPIO.OUT)
    GPIO.setup(led_pin_3, GPIO.OUT)

    dhtDevice = dht_start()
    temperature, humidity = 0, 0
    dht_lock = threading.Lock()

    def __init__(self):
        return

    # servo moves run in background threads so the GUI does not freeze
    def curtain_open(self):
        threading.Thread(target=self.curtain_open_helper, daemon=True).start()

    def curtain_open_helper(self):
        try:
            self.pwm1.ChangeDutyCycle(6.5)
            time.sleep(1.1)
            self.pwm1.ChangeDutyCycle(0)  # duty 0 stops sending pulses
            print("curtain open")
        except KeyboardInterrupt:
            self.pwm1.stop()
            GPIO.cleanup()

    def curtain_close(self):
        threading.Thread(target=self.curtain_close_helper, daemon=True).start()

    def curtain_close_helper(self):
        try:
            self.pwm1.ChangeDutyCycle(8.5)
            time.sleep(1)
            self.pwm1.ChangeDutyCycle(0)  # duty 0 stops sending pulses
            print("curtain closed")
        except KeyboardInterrupt:
            self.pwm1.stop()
            GPIO.cleanup()

    # the humidifier's control input is pulsed: two pulses to turn on, one to turn off
    def humid_on(self):
        time.sleep(0.3)
        threading.Thread(target=self.humid_on_helper, daemon=True).start()

    def humid_on_helper(self):
        print("humid on")
        GPIO.output(self.humid_pin, GPIO.HIGH)
        time.sleep(0.3)
        GPIO.output(self.humid_pin, GPIO.LOW)
        time.sleep(0.3)
        GPIO.output(self.humid_pin, GPIO.HIGH)
        time.sleep(0.3)
        GPIO.output(self.humid_pin, GPIO.LOW)

    def humid_off(self):
        time.sleep(0.3)
        threading.Thread(target=self.humid_off_helper, daemon=True).start()

    def humid_off_helper(self):
        print("humid off")
        GPIO.output(self.humid_pin, GPIO.HIGH)
        time.sleep(0.3)
        GPIO.output(self.humid_pin, GPIO.LOW)

    # motion-triggered lights: on for DURATION seconds in a background thread
    def light1last(self):
        threading.Thread(target=self.light1_duration, daemon=True).start()

    def light2last(self):
        threading.Thread(target=self.light2_duration, daemon=True).start()

    def light1_duration(self):
        self.light_on(1)
        time.sleep(DURATION)
        self.light_off(1)

    def light2_duration(self):
        self.light_on(2)
        time.sleep(DURATION)
        self.light_off(2)

    def light3_duration(self):
        self.light_on(3)
        time.sleep(DURATION)
        self.light_off(3)

    def light_on(self, index):
        if index == 1:
            GPIO.output(self.led_pin_1, GPIO.HIGH)
        elif index == 2:
            GPIO.output(self.led_pin_2, GPIO.HIGH)
        elif index == 3:
            GPIO.output(self.led_pin_3, GPIO.HIGH)

    def light_off(self, index):
        if index == 1:
            GPIO.output(self.led_pin_1, GPIO.LOW)
        elif index == 2:
            GPIO.output(self.led_pin_2, GPIO.LOW)
        elif index == 3:
            GPIO.output(self.led_pin_3, GPIO.LOW)

    def fan_on(self):
        print("fan on")
        GPIO.output(self.fan_pin, GPIO.HIGH)

    def fan_off(self):
        print("fan off")
        GPIO.output(self.fan_pin, GPIO.LOW)

    def motiondect1(self):
        return self.pir_sensor1.value

    def motiondect2(self):
        return self.pir_sensor2.value

    def motiondect3(self):
        return self.pir_sensor3.value

    # latest (temperature, humidity); 999 marks a failed reading
    def humitemp(self):
        with self.dht_lock:
            a, b = self.temperature, self.humidity
        if a is None:
            a = 999
        if b is None:
            b = 999
        return a, b

    def dht_init(self):
        threading.Thread(target=self.dht_helper, daemon=True).start()

    def dht_helper(self):
        while True:
            try:
                temperature = self.dhtDevice.temperature
                humidity = self.dhtDevice.humidity
                # "with" releases the lock even if something goes wrong, so it cannot stay held
                with self.dht_lock:
                    self.temperature, self.humidity = temperature, humidity
                time.sleep(0.1)
            except RuntimeError:
                # Errors happen fairly often, DHT's are hard to read, just keep going
                time.sleep(0.1)
                continue
            except Exception as error:
                self.dhtDevice.exit()
                raise error


def speak(text):
    print(text)
    # Write to the FIFO that playsound.py reads. Writing from Python instead of
    # os.system("echo " + text + " > speechfifo") keeps quotes and other shell
    # characters in the text from breaking the command.
    with open("speechfifo", "w") as fifo:
        fifo.write(text)


# request current weather from the WeatherAPI.com API
def get_weather(api_key, lat, lon):
    url = f'http://api.weatherapi.com/v1/current.json?key={api_key}&q={lat},{lon}'
    try:
        response = requests.get(url, timeout=10)  # don't hang on a stalled connection
    except requests.RequestException as e:
        print(f"Error: Unable to fetch weather data: {e}")
        return None
    if response.status_code == 200:
        return response.json()
    print(f"Error: Unable to fetch weather data. Status code: {response.status_code}")
    return None


def acquire_Ithaca_weather():
    api_key = 'YOUR API KEY'
    latitude, longitude = 42.443962, -76.501884
    weather_data = get_weather(api_key, latitude, longitude)
    if weather_data is None:
        return None, None  # request failed
    return weather_data['current']['temp_c'], weather_data['current']['condition']['text']


ITHACA = LocationInfo("Ithaca", "US", "US/Eastern", 42.443962, -76.501884)


# today's sunrise and sunset in Ithaca local time, as 'HH:MM:SS' strings
def sunrise():
    s = sun(ITHACA.observer, date=datetime.now(), tzinfo=ITHACA.timezone)
    return s['sunrise'].strftime('%H:%M:%S')


def sunset():
    s = sun(ITHACA.observer, date=datetime.now(), tzinfo=ITHACA.timezone)
    return s['sunset'].strftime('%H:%M:%S')


# first run of digits in a file name, e.g. 'image_12.jpg' -> 12
def extract_number(filename):
    parts = re.findall(r'\d+', filename)
    return int(parts[0]) if parts else None
```
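`curtain_open_helper` and `curtain_close_helper` drive the curtain servo with `ChangeDutyCycle(6.5)` and `ChangeDutyCycle(8.5)` on a 50 Hz PWM signal. Each period lasts 1/50 s = 20 ms, so those duty cycles correspond to 1.3 ms and 1.7 ms pulses. These sit on either side of the roughly 1.5 ms neutral pulse that hobby servos commonly use. The small sketch below converts between the two units. The function names are hypothetical and not part of helper.py.

```python
PWM_FREQUENCY_HZ = 50  # same frequency as GPIO.PWM(servo1_pin, 50) in helper.py


def duty_to_pulse_ms(duty_percent: float, freq_hz: float = PWM_FREQUENCY_HZ) -> float:
    """High time of one PWM period, in milliseconds, for a duty cycle given in percent."""
    period_ms = 1000.0 / freq_hz  # 20 ms at 50 Hz
    return duty_percent / 100.0 * period_ms


def pulse_ms_to_duty(pulse_ms: float, freq_hz: float = PWM_FREQUENCY_HZ) -> float:
    """Duty cycle in percent (the ChangeDutyCycle() argument) for a desired pulse width."""
    period_ms = 1000.0 / freq_hz
    return pulse_ms / period_ms * 100.0


if __name__ == "__main__":
    # open / close values used by curtain_open_helper / curtain_close_helper
    for duty in (6.5, 8.5):
        print(f"{duty}% duty -> {duty_to_pulse_ms(duty):.2f} ms pulse")
    print(f"1.5 ms neutral pulse -> {pulse_ms_to_duty(1.5):.2f}% duty")
```

Working in pulse widths makes it easier to retune the open/close values for a different servo, since servo datasheets usually quote pulse widths rather than duty cycles.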

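`sunrise()` and `sunset()` in helper.py return zero-padded `'HH:MM:SS'` strings. The introduction describes curtain control based on sunrise and sunset data. The minimal sketch below shows that decision. It assumes the Pi's clock is set to US/Eastern, so that `datetime.now().strftime('%H:%M:%S')` can be compared with those strings. `curtain_should_be_open` and `curtain_action` are hypothetical helpers, not functions from the project. A caller would map "open" and "close" to `GP.curtain_open()` and `GP.curtain_close()` and then update `self.curtain_open`.

```python
from datetime import time
from typing import Optional


def curtain_should_be_open(now_hms: str, sunrise_hms: str, sunset_hms: str) -> bool:
    """True from sunrise until sunset. All arguments are 'HH:MM:SS' strings in the same
    local time zone, the format returned by sunrise() and sunset() in helper.py."""
    now = time.fromisoformat(now_hms)
    return time.fromisoformat(sunrise_hms) <= now < time.fromisoformat(sunset_hms)


def curtain_action(is_open: bool, now_hms: str, sunrise_hms: str, sunset_hms: str) -> Optional[str]:
    """Return "open" or "close" only when the curtain is in the wrong state, else None,
    so that a polling loop does not re-drive the servo on every pass."""
    want_open = curtain_should_be_open(now_hms, sunrise_hms, sunset_hms)
    if want_open and not is_open:
        return "open"
    if not want_open and is_open:
        return "close"
    return None


if __name__ == "__main__":
    # illustrative times only
    print(curtain_action(False, "12:00:00", "07:20:00", "16:35:00"))
```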
```python
# audiorecorder.py
import threading
import time
import wave

import pyaudio


class Recorder:
    def __init__(self, chunk=4*1024, channels=1, rate=44100):
        self.CHUNK = chunk
        self.FORMAT = pyaudio.paInt16
        self.CHANNELS = channels
        self.RATE = rate
        self._running = True
        self._frames = []
        self._thread = None

    # start a thread to record
    def start(self):
        # set the flag here, not in the thread, so a quick stop() cannot be overwritten
        self._running = True
        self._frames = []
        self._thread = threading.Thread(target=self.__recording, daemon=True)
        self._thread.start()

    # record until stop() is called
    def __recording(self):
        p = pyaudio.PyAudio()
        stream = p.open(format=self.FORMAT,
                        channels=self.CHANNELS,
                        rate=self.RATE,
                        input=True,
                        frames_per_buffer=self.CHUNK)
        while self._running:
            # keep recording instead of raising if the input buffer overflowed
            data = stream.read(self.CHUNK, exception_on_overflow=False)
            self._frames.append(data)
        stream.stop_stream()
        stream.close()
        p.terminate()

    # stop recording and wait until the last chunk has been stored
    def stop(self):
        self._running = False
        if self._thread is not None:
            self._thread.join()

    # save the recorded frames as a WAV file
    def save(self, filename="voice"):
        if not filename.endswith(".wav"):
            filename = filename + ".wav"
        with wave.open(filename, 'wb') as wf:
            wf.setnchannels(self.CHANNELS)
            # module-level helper; no extra PyAudio instance needs to be created
            wf.setsampwidth(pyaudio.get_sample_size(self.FORMAT))
            wf.setframerate(self.RATE)
            wf.writeframes(b''.join(self._frames))
        print("Saved")
```
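`Recorder.save()` writes 16-bit mono PCM at 44.1 kHz, and each `stream.read(CHUNK)` call adds CHUNK frames. The length of a recording is therefore (number of chunks × CHUNK) / RATE seconds. The sketch below checks that layout without a microphone. It synthesizes a tone with the standard-library `wave` and `struct` modules, using the same parameters, and reads the duration back. The function names and the 440 Hz test tone are hypothetical.

```python
import math
import os
import struct
import tempfile
import wave

# Same defaults as Recorder: 16-bit (paInt16 = 2 bytes) mono at 44.1 kHz, 4096-frame chunks
RATE = 44100
CHANNELS = 1
SAMPLE_WIDTH = 2
CHUNK = 4 * 1024


def write_tone(filename: str, seconds: float, freq_hz: float = 440.0) -> None:
    """Write a sine tone in the same WAV layout that Recorder.save() produces."""
    n_frames = int(RATE * seconds)
    # signed 16-bit little-endian samples at half of full scale
    data = b"".join(
        struct.pack("<h", int(16383 * math.sin(2 * math.pi * freq_hz * i / RATE)))
        for i in range(n_frames)
    )
    with wave.open(filename, "wb") as wf:
        wf.setnchannels(CHANNELS)
        wf.setsampwidth(SAMPLE_WIDTH)
        wf.setframerate(RATE)
        wf.writeframes(data)


def wav_duration(filename: str) -> float:
    """Length of a WAV file in seconds: frame count divided by frame rate."""
    with wave.open(filename, "rb") as wf:
        return wf.getnframes() / wf.getframerate()


def chunks_to_seconds(n_chunks: int) -> float:
    """Audio captured by n_chunks calls to stream.read(CHUNK), in seconds."""
    return n_chunks * CHUNK / RATE


if __name__ == "__main__":
    path = os.path.join(tempfile.mkdtemp(), "tone.wav")
    write_tone(path, 1.0)
    print(f"{path}: {wav_duration(path):.3f} s")
    print(f"11 chunks = {chunks_to_seconds(11):.3f} s")
```

With the default 4096-frame chunk, each chunk holds about 93 ms of audio. Any recording is therefore a whole number of chunks, and `stop()` can extend it by up to one chunk.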

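```python
# playsound.py
import os
import sys
import time

from gtts import gTTS

fifo_path = 'speechfifo'

# read text from the FIFO and speak it
while True:
    # open() blocks until a writer (speak() in helper.py) opens the FIFO;
    # read() then returns everything written before the writer closed it
    with open(fifo_path, 'r') as fifo_file:
        data = fifo_file.read().strip()  # drop any trailing newline, e.g. the one echo appends
    print("Spoke: " + data)
    if data == 'exit':
        sys.exit()
    if not data:
        continue  # gTTS cannot speak empty text
    tts = gTTS(text=data, lang='en')
    tts.save("speech.mp3")
    # play in the background and log player output to playerlog.txt
    os.system("nohup mpg123 speech.mp3 > playerlog.txt 2>&1 &")
    time.sleep(1)
```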
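`speak()` and playsound.py communicate through the named pipe `speechfifo`. The writer opens the pipe, writes one message and closes it. The reader's `read()` returns at that close (EOF) and then reopens the pipe for the next message. The self-contained sketch below reproduces that handshake with a temporary FIFO and a reader thread. It is POSIX only, because it relies on `os.mkfifo`. The helper names are hypothetical.

```python
import os
import tempfile
import threading


def fifo_reader(path: str, received: list) -> None:
    # As in playsound.py: open() blocks until a writer connects,
    # and read() returns once the writer closes its end (EOF)
    with open(path, "r") as fifo:
        received.append(fifo.read().strip())


def fifo_writer(path: str, text: str) -> None:
    # As in speak(): open() blocks until a reader connects; closing sends EOF
    with open(path, "w") as fifo:
        fifo.write(text)


def roundtrip(text: str) -> str:
    """Send one message through a fresh named pipe and return what the reader got."""
    path = os.path.join(tempfile.mkdtemp(), "speechfifo")
    os.mkfifo(path)  # POSIX only
    received: list = []
    reader = threading.Thread(target=fifo_reader, args=(path, received))
    reader.start()
    fifo_writer(path, text)
    reader.join()
    os.remove(path)
    return received[0]


if __name__ == "__main__":
    print(repr(roundtrip("Room temperature: 22 Celsius degree\n")))
```

Because each message is delimited by the writer closing the pipe, two processes that call `speak()` at the same moment could have their text merged into one read. With a single GUI process writing, that is unlikely to matter.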

```python
# log.py
import sqlite3
from datetime import datetime


# a database class that stores two kinds of log together in one "rooms" table
class logDB:
    def __init__(self, db_name):
        self.conn = sqlite3.connect(db_name)
        self.cursor = self.conn.cursor()
        self.create_table()

    def create_table(self):
        self.cursor.execute('''
            CREATE TABLE IF NOT EXISTS rooms (
                id INTEGER PRIMARY KEY,
                location TEXT NOT NULL,
                name TEXT NOT NULL,
                time TEXT NOT NULL,
                photo_file_name TEXT NOT NULL
            )
        ''')
        self.conn.commit()

    def insert(self, location, name, photo_file_name):
        if not photo_file_name:
            raise ValueError("photo_file_name cannot be null")
        # zero-padded 'YYYY-MM-DD HH:MM:SS' strings sort chronologically,
        # so ORDER BY time returns the newest entries first
        current_time = datetime.now().strftime('%Y-%m-%d %H:%M:%S')
        self.cursor.execute('''
            INSERT INTO rooms (location, name, time, photo_file_name)
            VALUES (?, ?, ?, ?)
        ''', (location, name, current_time, photo_file_name))
        self.conn.commit()

    def remove(self, entry_id):
        self.cursor.execute('DELETE FROM rooms WHERE id = ?', (entry_id,))
        self.conn.commit()

    def search_on_room(self, location):
        self.cursor.execute('SELECT * FROM rooms WHERE location = ?', (location,))
        return self.cursor.fetchall()

    def search_on_name(self, name):
        self.cursor.execute('SELECT * FROM rooms WHERE name = ?', (name,))
        return self.cursor.fetchall()

    def traverse(self):
        self.cursor.execute('SELECT * FROM rooms')
        return self.cursor.fetchall()

    def list_recent_entries(self, count=3):
        self.cursor.execute('SELECT * FROM rooms ORDER BY time DESC LIMIT ?', (count,))
        return self.cursor.fetchall()

    def list_recent_entries_by_name_or_location_with_limit(self, n, name=None, location=None):
        query = 'SELECT * FROM rooms WHERE '
        params = []
        if name and location:
            query += 'name = ? AND location = ? '
            params.extend([name, location])
        elif name:
            query += 'name = ? '
            params.append(name)
        elif location:
            query += 'location = ? '
            params.append(location)
        else:
            return []  # No name or location provided, return empty list
        query += 'ORDER BY time DESC LIMIT ?'
        params.append(n)
        self.cursor.execute(query, params)
        return self.cursor.fetchall()

    # entries whose photo_file_name is not the placeholder string 'None'
    def traverse_recent_entries_with_photo(self, n):
        self.cursor.execute('''
            SELECT * FROM rooms
            WHERE photo_file_name != 'None'
            ORDER BY time DESC
            LIMIT ?
        ''', (n,))
        return self.cursor.fetchall()

    def clean_database(self):
        self.cursor.execute('DELETE FROM rooms')
        self.conn.commit()

    def close(self):
        self.conn.close()


log = logDB("ROOM.db")
log.clean_database()  # start each run with an empty log
```
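The query logic of `logDB` can be exercised off the Pi. The sketch below runs against an in-memory SQLite database with the same `rooms` schema. The location strings "door" and "room1" and the sample rows are made up for illustration. The sketch also shows why the photo filter compares against the string `'None'` instead of using `IS NOT NULL`. `photo_file_name` is declared NOT NULL, and `recognize()` in facerecog.py returns the string "None" when no photo was saved, so entries without a photo are presumably stored with that placeholder.

```python
import sqlite3

SCHEMA = '''
CREATE TABLE IF NOT EXISTS rooms (
    id INTEGER PRIMARY KEY,
    location TEXT NOT NULL,
    name TEXT NOT NULL,
    time TEXT NOT NULL,
    photo_file_name TEXT NOT NULL
)'''

# Hypothetical sample entries for illustration
SAMPLE_ROWS = [
    ("door", "Zidong", "2023-12-01 09:15:00", "/home/pi/proj/photohistory/image_1.jpg"),
    ("room1", "Zidong", "2023-12-01 09:20:00", "None"),
    ("door", "Unknown", "2023-12-01 18:42:00", "/home/pi/proj/photohistory/image_2.jpg"),
]


def demo_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute(SCHEMA)
    conn.executemany(
        "INSERT INTO rooms (location, name, time, photo_file_name) VALUES (?, ?, ?, ?)",
        SAMPLE_ROWS,
    )
    conn.commit()
    return conn


def recent_with_photo(conn: sqlite3.Connection, n: int) -> list:
    # same filter and ordering as traverse_recent_entries_with_photo()
    cur = conn.execute(
        "SELECT name, time, photo_file_name FROM rooms "
        "WHERE photo_file_name != 'None' ORDER BY time DESC LIMIT ?",
        (n,),
    )
    return cur.fetchall()


def recent_names_at(conn: sqlite3.Connection, location: str, n: int) -> list:
    # same query shape as list_recent_entries_by_name_or_location_with_limit(n, location=...)
    cur = conn.execute(
        "SELECT name FROM rooms WHERE location = ? ORDER BY time DESC LIMIT ?",
        (location, n),
    )
    return [row[0] for row in cur.fetchall()]


if __name__ == "__main__":
    conn = demo_db()
    print(recent_with_photo(conn, 5))
    print(recent_names_at(conn, "door", 5))
```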

```python
# train.py
import os
import time

import cv2
import numpy as np
from PIL import Image

# Path for face image database
path = '/home/pi/proj/dataset'
recognizer = cv2.face.LBPHFaceRecognizer_create()  # cv2.face needs opencv-contrib-python
detector = cv2.CascadeClassifier(cv2.data.haarcascades + 'haarcascade_frontalface_default.xml')


# get the face crops and their numeric ids;
# the id is the second dot-separated field of each file name
def getImagesAndLabels(path):
    imagePaths = [os.path.join(path, f) for f in os.listdir(path)]
    faceSamples = []
    ids = []
    for imagePath in imagePaths:
        PIL_img = Image.open(imagePath).convert('L')  # convert it to grayscale
        img_numpy = np.array(PIL_img, 'uint8')
        face_id = int(os.path.split(imagePath)[-1].split(".")[1])
        faces = detector.detectMultiScale(img_numpy)
        for (x, y, w, h) in faces:
            faceSamples.append(img_numpy[y:y+h, x:x+w])
            ids.append(face_id)
    return faceSamples, ids


print("\n [INFO] Training faces. It will take a few seconds. Wait ...")
faces, ids = getImagesAndLabels(path)
recognizer.train(faces, np.array(ids))

# Save the model into trained_faces.yml
recognizer.write('trained_faces.yml')  # recognizer.save() worked on Mac, but not on Pi

# Print the number of faces trained and end program
print("\n [INFO] {0} faces trained. Exiting Program".format(len(np.unique(ids))))
```
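`getImagesAndLabels()` reads the label from the second dot-separated field of each file name. The dataset files must therefore follow a pattern like `<prefix>.<id>.<sample>.jpg`. That id must also index the `names` list in facerecog.py, where 0 is 'Unknown' and 1 is 'Zidong'. The sketch below is a stricter parser that skips non-matching files such as hidden system files instead of raising. The `User.1.17.jpg` naming and the regular expression are assumptions, not the project's documented scheme.

```python
import os
import re
from typing import Optional

# Hypothetical naming scheme: "<prefix>.<id>.<sample>.<ext>", e.g. "User.1.17.jpg"
FACE_FILE = re.compile(r"^[^.]+\.(\d+)\.\d+\.(jpg|jpeg|png)$", re.IGNORECASE)


def face_id_from_path(image_path: str) -> Optional[int]:
    """Return the numeric id encoded in the file name, or None if the name does not match."""
    match = FACE_FILE.match(os.path.basename(image_path))
    return int(match.group(1)) if match else None


if __name__ == "__main__":
    for name in ("/home/pi/proj/dataset/User.1.17.jpg", "/home/pi/proj/dataset/.DS_Store"):
        print(name, "->", face_id_from_path(name))
```

Inside the training loop, a `None` result would mean `continue`, so a stray file no longer aborts training.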

```python
# facerecog.py
import os

import cv2
import numpy as np


class face_recog():
    # LBPH model produced by train.py (cv2.face needs opencv-contrib-python)
    recognizer = cv2.face.LBPHFaceRecognizer_create()
    recognizer.read('trained_faces.yml')
    faceCascade = cv2.CascadeClassifier(cv2.data.haarcascades + 'haarcascade_frontalface_default.xml')
    image_path = '/home/pi/proj/my_photo.jpg'
    folder_path = '/home/pi/proj/photohistory'
    font = cv2.FONT_HERSHEY_SIMPLEX
    # names related to ids: the list index is the label id used in train.py
    names = ['Unknown', 'Zidong']

    def __init__(self):
        return

    # Look at 10 frames, count recognitions per name, and save a photo to
    # folder_path if a name was seen more than `threshold` times
    def recognize(self, threshold):
        # Initialize and start realtime video capture
        cam = cv2.VideoCapture(0)
        cam.set(cv2.CAP_PROP_FRAME_WIDTH, 640)   # set video width
        cam.set(cv2.CAP_PROP_FRAME_HEIGHT, 480)  # set video height
        # Define min window size to be recognized as a face
        minW = 0.1 * cam.get(cv2.CAP_PROP_FRAME_WIDTH)
        minH = 0.1 * cam.get(cv2.CAP_PROP_FRAME_HEIGHT)
        counter = {"Unknown": 0, "Zidong": 0}  # recognitions per name
        frame = 0
        code_run = True
        while code_run:
            ret, img = cam.read()
            if not ret:  # no frame from the camera
                break
            gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
            faces = self.faceCascade.detectMultiScale(
                gray,
                scaleFactor=1.1,
                minNeighbors=3,
                minSize=(int(minW), int(minH)),
            )
            for (x, y, w, h) in faces:
                cv2.rectangle(img, (x, y), (x + w, y + h), (0, 255, 0), 2)
                label_id, confidence = self.recognizer.predict(gray[y:y + h, x:x + w])
                # LBPH confidence is a distance: 0 is a perfect match, lower is better
                if confidence < 100:
                    name = self.names[label_id]
                    counter[name] = counter[name] + 1
                else:
                    counter["Unknown"] = counter["Unknown"] + 1
            k = cv2.waitKey(10) & 0xff  # Press 'ESC' to stop early
            if k == 27:
                break
            frame += 1
            if frame >= 10:
                code_run = False

        if counter['Zidong'] + counter['Unknown'] == 0:
            cam.release()  # release the camera on this path too
            return 'No Face', "None"  # no face
        # next free number for photohistory/image_<n>.jpg
        existing_images = [int(filename.split('_')[1].split('.')[0])
                           for filename in os.listdir(self.folder_path)
                           if filename.endswith('.jpg')]
        max_index = max(existing_images) if existing_images else 0
        ret, snapshot = cam.read()
        image_path = os.path.join(self.folder_path, f"image_{max_index + 1}.jpg")
        cam.release()
        if counter['Unknown'] > threshold:
            cv2.imwrite(image_path, snapshot)
            return 'Unknown', image_path
        elif counter["Zidong"] > threshold:
            cv2.imwrite(image_path, snapshot)
            return 'Zidong', image_path
        else:
            return 'No Face', "None"  # too few recognitions to decide


if __name__ == "__main__":
    face = face_recog()
    while True:
        a, b = face.recognize(2)
        print(a, b)
```
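`recognize()` turns per-face LBPH predictions into a single verdict. A prediction counts for a name only if its distance is below 100, and otherwise it counts as "Unknown". "Unknown" is tested against the threshold before "Zidong", so a stranger seen often enough wins even if the known user was seen more. The sketch below isolates that voting rule as a pure function over (label_id, confidence) pairs so that it can be tested without a camera. `decide` is a hypothetical name. The id-to-name table and the 100 cut-off are copied from facerecog.py.

```python
from collections import Counter
from typing import Iterable, Tuple

NAMES = ["Unknown", "Zidong"]  # same id -> name table as facerecog.py
MAX_DISTANCE = 100             # LBPH distances below this count as a match


def decide(predictions: Iterable[Tuple[int, float]], threshold: int) -> str:
    """predictions: one (label_id, confidence) pair per detected face, over all frames."""
    counts = Counter({name: 0 for name in NAMES})
    for label_id, confidence in predictions:
        name = NAMES[label_id] if confidence < MAX_DISTANCE else "Unknown"
        counts[name] += 1
    if sum(counts.values()) == 0:
        return "No Face"
    # Same precedence as recognize(): Unknown is checked before Zidong
    if counts["Unknown"] > threshold:
        return "Unknown"
    if counts["Zidong"] > threshold:
        return "Zidong"
    return "No Face"


if __name__ == "__main__":
    print(decide([(1, 45.0)] * 3, threshold=2))
    print(decide([(1, 45.0)] * 5 + [(1, 150.0)] * 3, threshold=2))
```

Checking "Unknown" first errs toward logging a stranger, which seems a reasonable choice for a door camera. Comparing the two counts instead would favor the majority.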

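```python
# shutdown.py
import os
import time

import RPi.GPIO as GPIO

GPIO.setmode(GPIO.BCM)
# shutdown button on BCM 27, active low with the internal pull-up enabled
GPIO.setup(27, GPIO.IN, pull_up_down=GPIO.PUD_UP)


def shutdown(channel):
    GPIO.cleanup()
    os.system("sudo shutdown -h now")


GPIO.add_event_detect(27, GPIO.FALLING, callback=shutdown, bouncetime=300)

try:
    # the callback runs on RPi.GPIO's own thread; sleep here instead of
    # busy-waiting with "pass", which would keep one CPU core at 100%
    while True:
        time.sleep(1)
except KeyboardInterrupt:
    GPIO.cleanup()
```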